You are a software engineer working on an AI-powered product, and you’ve found yourself in a situation where your product team needs to adjust the wording of some of the prompts that have been hardcoded into the codebase. Or maybe you are working on a smaller project with a single client who wants a bit more control over the final wording of the prompts. The solution is to move the prompts out of your source code and into a dedicated prompt management system so that other people can work on them.

In this post I’m going to take a look at different prompt management systems available on the market today and review how easy they were to set up.

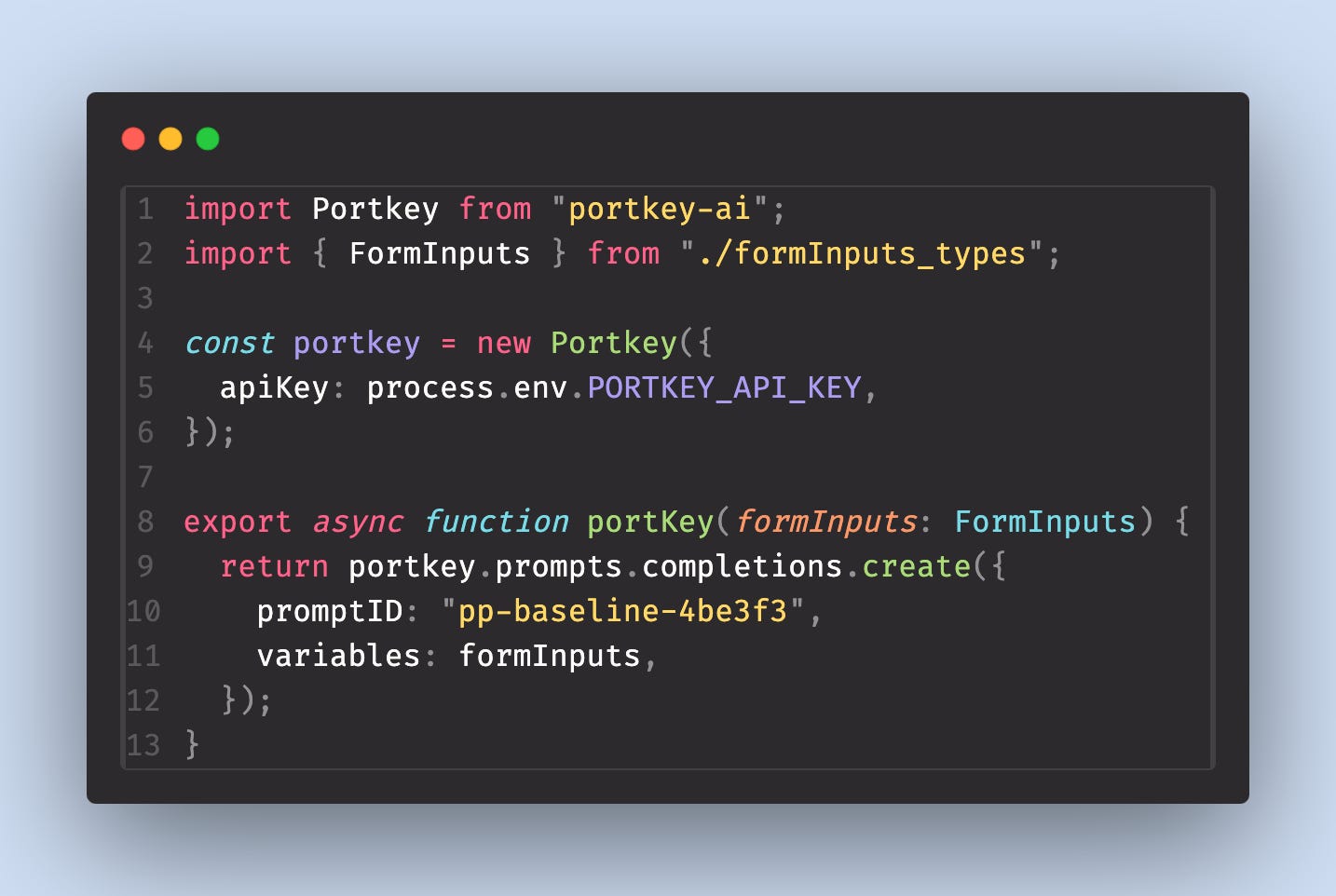

For context, the app I’m going to try and set up was written in TypeScript. I’m using Next.js, so it’s JavaScript on the front and back end. Everything connected to AI in my app is contained in the file below. The file exports a single function that I then call in the app. For demonstration purposes, this is as close to the textbook example for chat completion from the OpenAI documentation as I could get. The only difference is that I’m setting the response format to JSON.
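As a rough sketch of what a file like this can look like (not the app's actual code), the example below calls OpenAI's Chat Completions REST endpoint with `response_format` set to JSON. The model name, the function names and the injected `fetchImpl` parameter are assumptions made for illustration; the injection is only there so the function can be exercised without a network call.

```typescript
// Minimal fetch-like signature so the sketch does not depend on DOM or Node typings.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Builds the request for the Chat Completions endpoint with JSON mode switched on.
// Note: JSON mode expects the word "JSON" to appear somewhere in the messages.
export function buildChatRequest(apiKey: string, messages: ChatMessage[]) {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({
        model: "gpt-4o-mini", // assumption: swap in whichever model you use
        messages,
        response_format: { type: "json_object" },
      }),
    },
  };
}

// Sends the request and returns the parsed JSON document from the first choice.
export async function getCompletion(
  fetchImpl: FetchLike,
  apiKey: string,
  messages: ChatMessage[]
): Promise<unknown> {
  const { url, init } = buildChatRequest(apiKey, messages);
  const res = await fetchImpl(url, init);
  if (!res.ok) throw new Error(`OpenAI request failed with status ${res.status}`);
  const completion = (await res.json()) as {
    choices: { message: { content: string } }[];
  };
  const content = completion.choices[0]?.message.content;
  if (content === undefined) throw new Error("OpenAI returned no choices");
  // In JSON mode the document arrives as a string in message.content.
  return JSON.parse(content);
}
```

In a Next.js server route this would be called with the global `fetch`, for example `getCompletion(fetch, apiKey, messages)`, with the key read from an environment variable.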

Setting Up Portkey

I’m going to run through Portkey first because their support team got back to me the fastest and this was the first tool I managed to get working.

Swapping the OpenAI implementation for Portkey’s was fairly straightforward. There’s an npm package you install, you get an API key, and then you use their JavaScript client, which works a lot like OpenAI’s (except that you reference a prompt ID for a prompt you created on their dashboard).

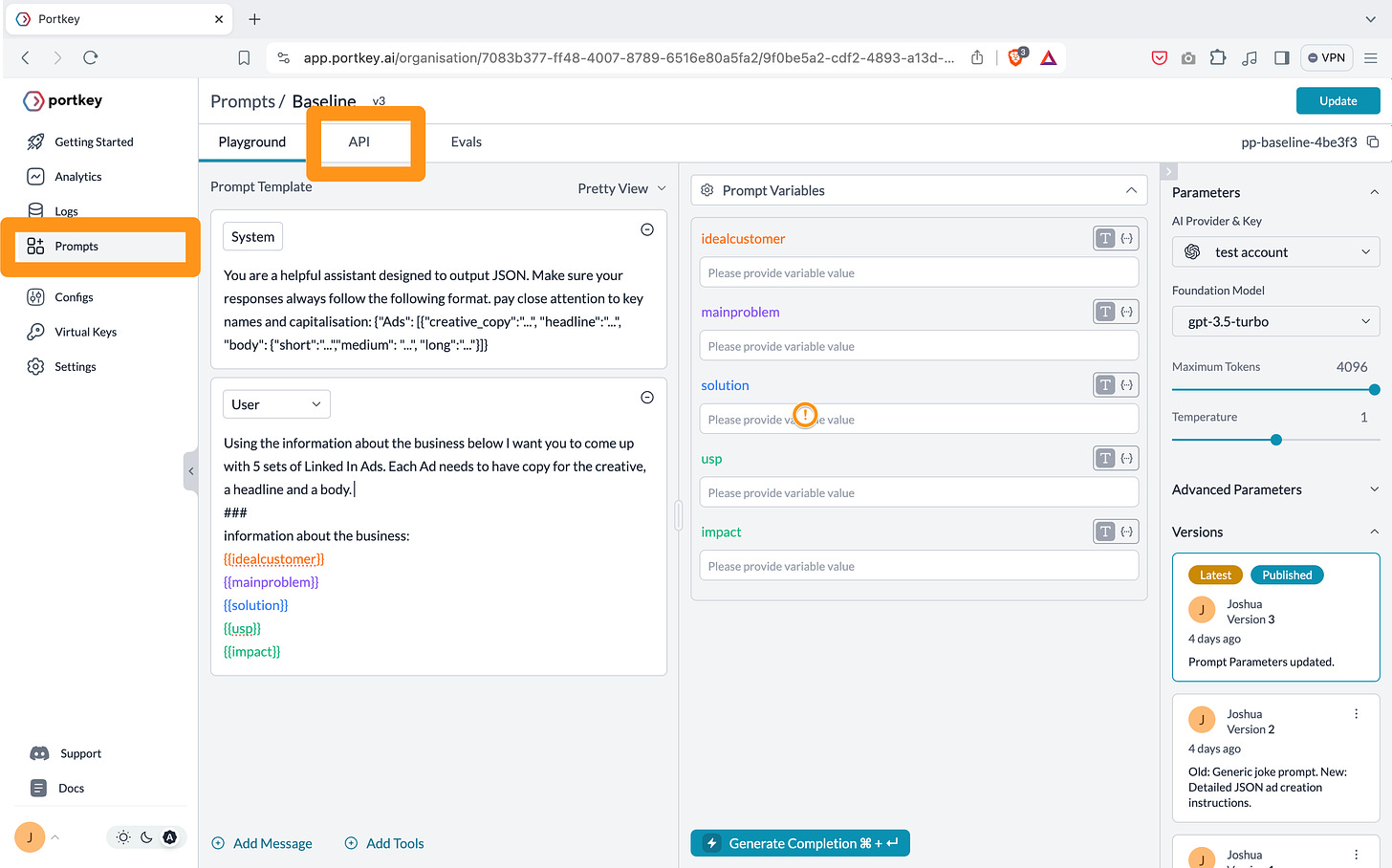

There’s a ‘Prompts’ tab on the left of their app with a playground for creating prompts. Using the playground requires adding your own OpenAI keys as ‘virtual keys’. When you publish your prompts, an API tab will appear at the top of the screen, and this will contain the API and implementation details that you can copy and paste.
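To give a feel for the shape of the call, here is a hedged sketch that hits a published prompt over plain HTTP rather than through the npm client. The URL pattern, the `x-portkey-api-key` header, the `variables` body and the OpenAI-style response are my understanding of Portkey's REST API and should be treated as assumptions; the function names and the prompt ID are hypothetical. Whatever the API tab shows for your prompt takes precedence.

```typescript
// Minimal fetch-like signature so the sketch does not depend on DOM or Node typings.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Builds a completion request for a prompt stored on the Portkey dashboard.
// Assumption: endpoint path and header name as described in the lead-in.
export function buildPortkeyRequest(
  apiKey: string,
  promptId: string,
  variables: Record<string, string>
) {
  return {
    url: `https://api.portkey.ai/v1/prompts/${encodeURIComponent(promptId)}/completions`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        "x-portkey-api-key": apiKey,
      },
      // The prompt text lives on the dashboard; only the variables are sent.
      body: JSON.stringify({ variables }),
    },
  };
}

// Runs the stored prompt and returns the text of the first choice.
export async function runPortkeyPrompt(
  fetchImpl: FetchLike,
  apiKey: string,
  promptId: string,
  variables: Record<string, string>
): Promise<string> {
  const { url, init } = buildPortkeyRequest(apiKey, promptId, variables);
  const res = await fetchImpl(url, init);
  if (!res.ok) throw new Error(`Portkey request failed with status ${res.status}`);
  // Assumption: the response mirrors OpenAI's chat completion shape.
  const data = (await res.json()) as {
    choices: { message: { content: string } }[];
  };
  const content = data.choices[0]?.message.content;
  if (content === undefined) throw new Error("Portkey returned no choices");
  return content;
}
```

The point of the pattern is that the source code only knows a prompt ID and a set of variables, so the wording can change on the dashboard without a deploy.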

Portkey gives you 10K requests per month on their free plan and then costs $99 a month for up to 1 million requests a month.

On the plus side, Portkey has Node, Python and cURL implementations. This platform was also the only prompt manager I tried that allowed me to set the response format to JSON.

On the other hand, the reason I couldn’t get this working on the first try is that all of the API implementation information is only revealed after you publish a prompt. It would have been clearer if the same information was available in their documentation so that I could see how everything works before committing to the app.

Getting ManagePrompt to Work

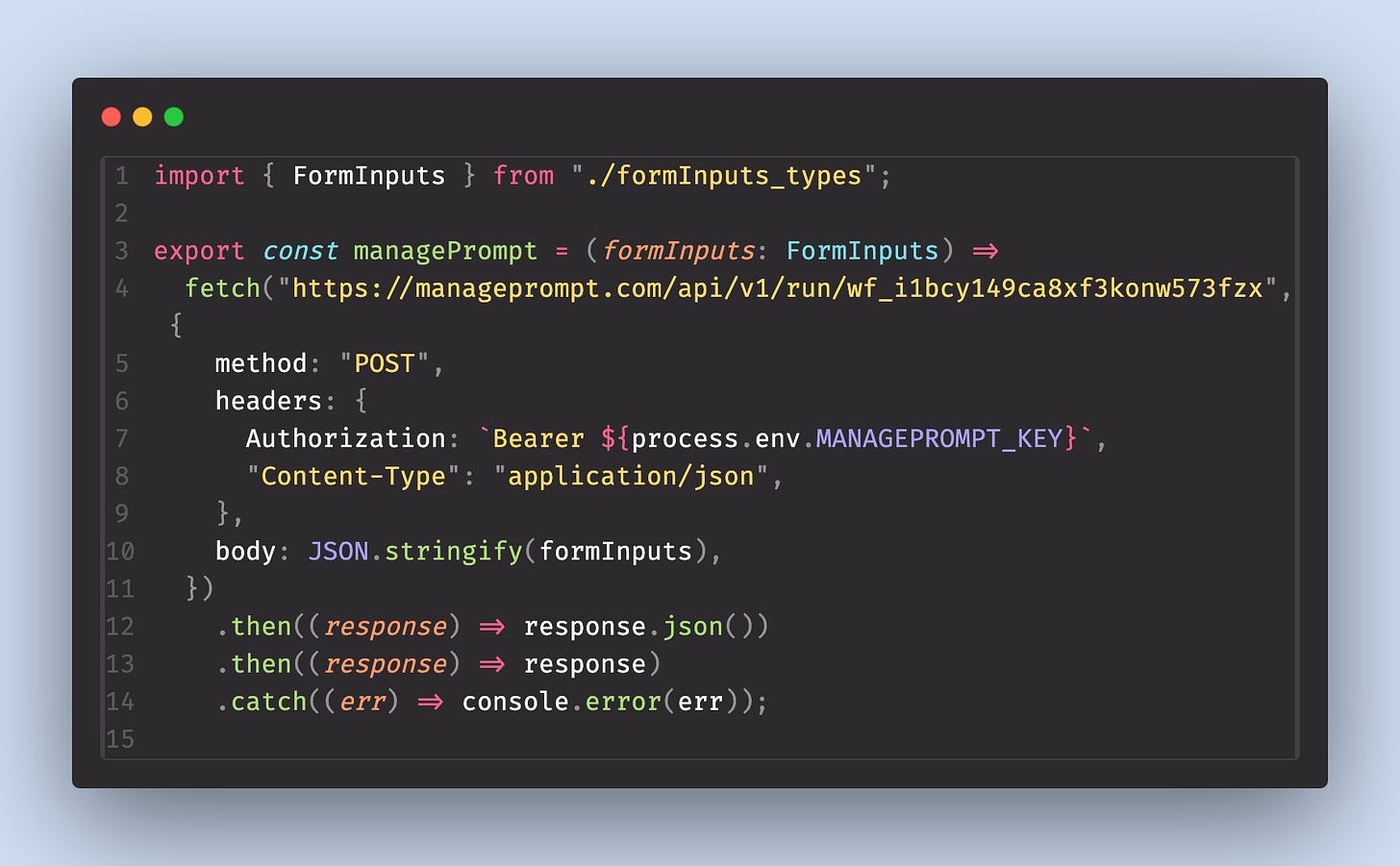

The ManagePrompt documentation has a Node client, but I could not get it to work despite speaking to their support team. However, they also let you access prompts with a POST request, so I used that instead.

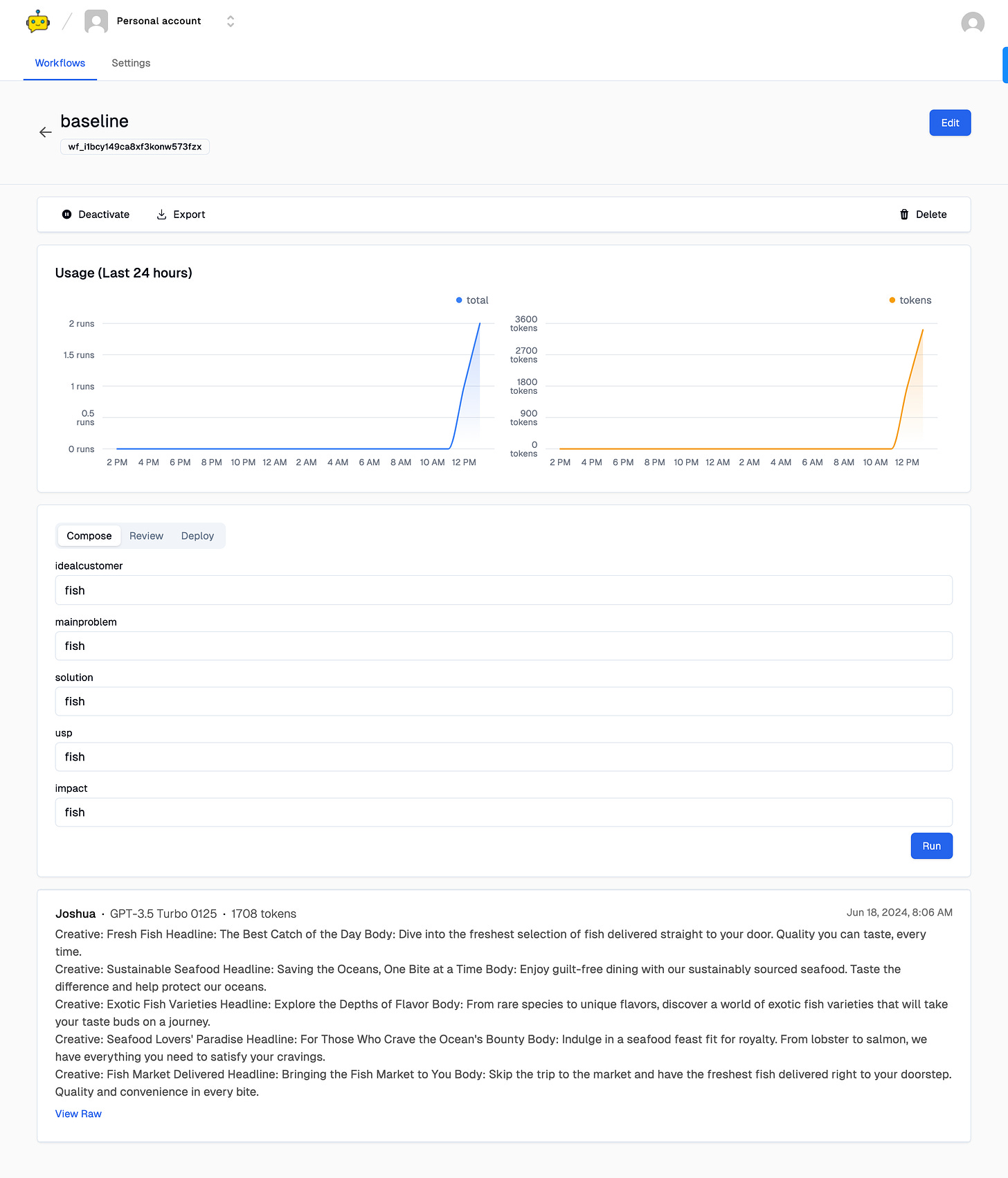

In ManagePrompt, each prompt is called a ‘workflow’. Unfortunately, I was unable to set the system prompt on any of the options, nor was I able to change the response format to JSON, so I couldn’t use this tool in the end.

When published, each workflow gives you a separate URL you can call with a POST request that returns the text completion as a string. You also get some basic analytics and a mini playground where you can test the prompt with different inputs.
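A minimal sketch of that POST call is below. The workflow URL is whatever ManagePrompt gives you on publish, so it is passed in rather than guessed. The bearer-token header and the JSON body of named inputs are assumptions, and the function names are hypothetical.

```typescript
// Fetch-like signature that only needs a text body back.
type TextFetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; text(): Promise<string> }>;

// Builds the POST request for one published workflow.
// Assumption: auth is a bearer token and inputs are sent as a flat JSON object.
export function buildWorkflowRequest(
  workflowUrl: string,
  token: string,
  inputs: Record<string, string>
) {
  return {
    url: workflowUrl,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${token}`,
      },
      body: JSON.stringify(inputs),
    },
  };
}

// Calls the workflow and returns the completion, which comes back as a plain string.
export async function runWorkflow(
  fetchImpl: TextFetchLike,
  workflowUrl: string,
  token: string,
  inputs: Record<string, string>
): Promise<string> {
  const { url, init } = buildWorkflowRequest(workflowUrl, token, inputs);
  const res = await fetchImpl(url, init);
  if (!res.ok) throw new Error(`Workflow request failed with status ${res.status}`);
  return res.text();
}
```

Because the result is a string rather than a structured completion, any JSON you need has to be asked for in the prompt itself and parsed on your side.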

ManagePrompt’s pricing model comes in at $0.10 per 1K tokens. You never share your OpenAI keys with ManagePrompt; they cover your production calls and the playground calls. However, they offer several different models and several different LLM providers to choose from, so I don’t think charging a single price for tokens regardless of the model is fair.
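To make the flat rate concrete, here is the arithmetic as a tiny sketch. It assumes the quoted rate applies uniformly to every model and every token; the function name is made up for illustration.

```typescript
// Flat rate quoted above, in US dollars per 1,000 tokens.
const PRICE_PER_1K_TOKENS_USD = 0.1;

// Estimates the bill for a given number of tokens at the flat rate.
// The model is deliberately not a parameter: the price does not depend on it.
export function estimateCostUsd(totalTokens: number): number {
  return (totalTokens / 1000) * PRICE_PER_1K_TOKENS_USD;
}
```

At that rate, 25,000 tokens come to about $2.50 whichever model handled them.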

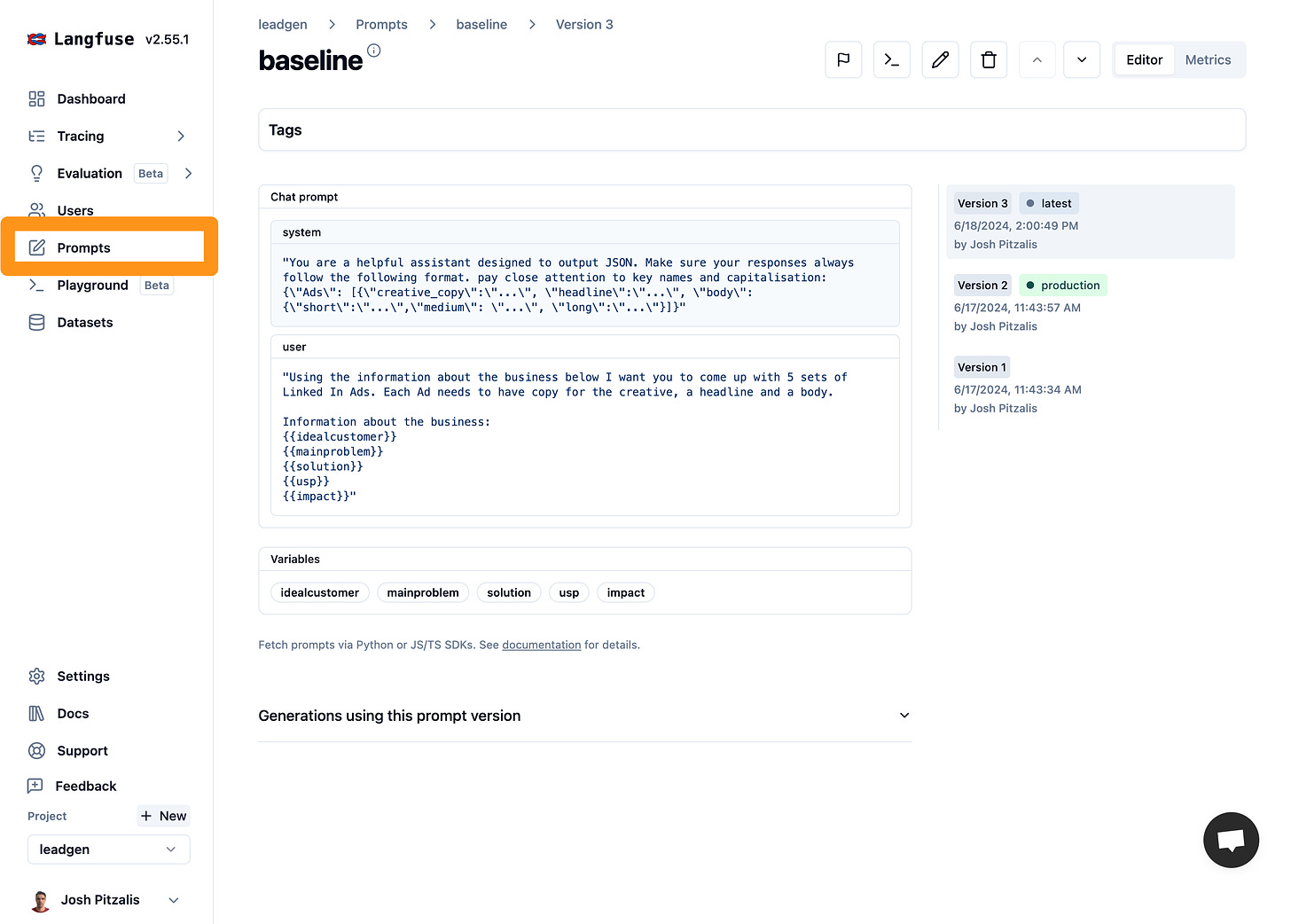

Configuring Langfuse

Langfuse took quite a different approach to prompt management from the other examples. Langfuse does not call OpenAI directly. They only store your prompt text. Calling Langfuse will return your prompt text, which you then have to pass into OpenAI or any other LLM you have implemented.

Personally, I found the Langfuse app experience a bit technical and overwhelming. The app is focused on providing analytics for your LLM calls, so prompt management feels like more of an additional feature. There is a ‘Prompts’ tab on the left side panel of their app that lets you store as many prompts as you want.
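The two-step flow can be sketched as follows: fetch the stored text, fill in any variables, then build the messages you hand to your LLM call. `PromptStore` is a hypothetical stand-in rather than Langfuse's real client, and the `{{variable}}` placeholder syntax is an assumption about how the stored template is written.

```typescript
type ChatMessage = { role: "system" | "user" | "assistant"; content: string };

// Hypothetical stand-in for a prompt store client that returns raw prompt text.
interface PromptStore {
  getPromptText(name: string): Promise<string>;
}

// Replaces {{variable}} placeholders with values; unknown names are left untouched.
export function compilePrompt(
  template: string,
  variables: Record<string, string>
): string {
  return template.replace(/\{\{\s*(\w+)\s*\}\}/g, (match: string, key: string) => {
    const value = Object.prototype.hasOwnProperty.call(variables, key)
      ? variables[key]
      : undefined;
    return value ?? match;
  });
}

// Step 1: fetch the prompt text. Step 2: turn it into messages for the LLM call.
export async function buildMessages(
  store: PromptStore,
  promptName: string,
  variables: Record<string, string>,
  userInput: string
): Promise<ChatMessage[]> {
  const template = await store.getPromptText(promptName);
  return [
    { role: "system", content: compilePrompt(template, variables) },
    { role: "user", content: userInput },
  ];
}
```

The resulting messages go into whatever OpenAI call you already have, which is why this approach leaves settings such as the response format entirely in your own code.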

They don’t have a playground in the prompt manager but they do have a separate tab with a playground that you can add your own OpenAI keys to.

On the upside, since Langfuse is focused on analytics, their pricing model doesn’t cover calling prompts and, as I understand it, the product is free if you’re just using it as a prompt manager.

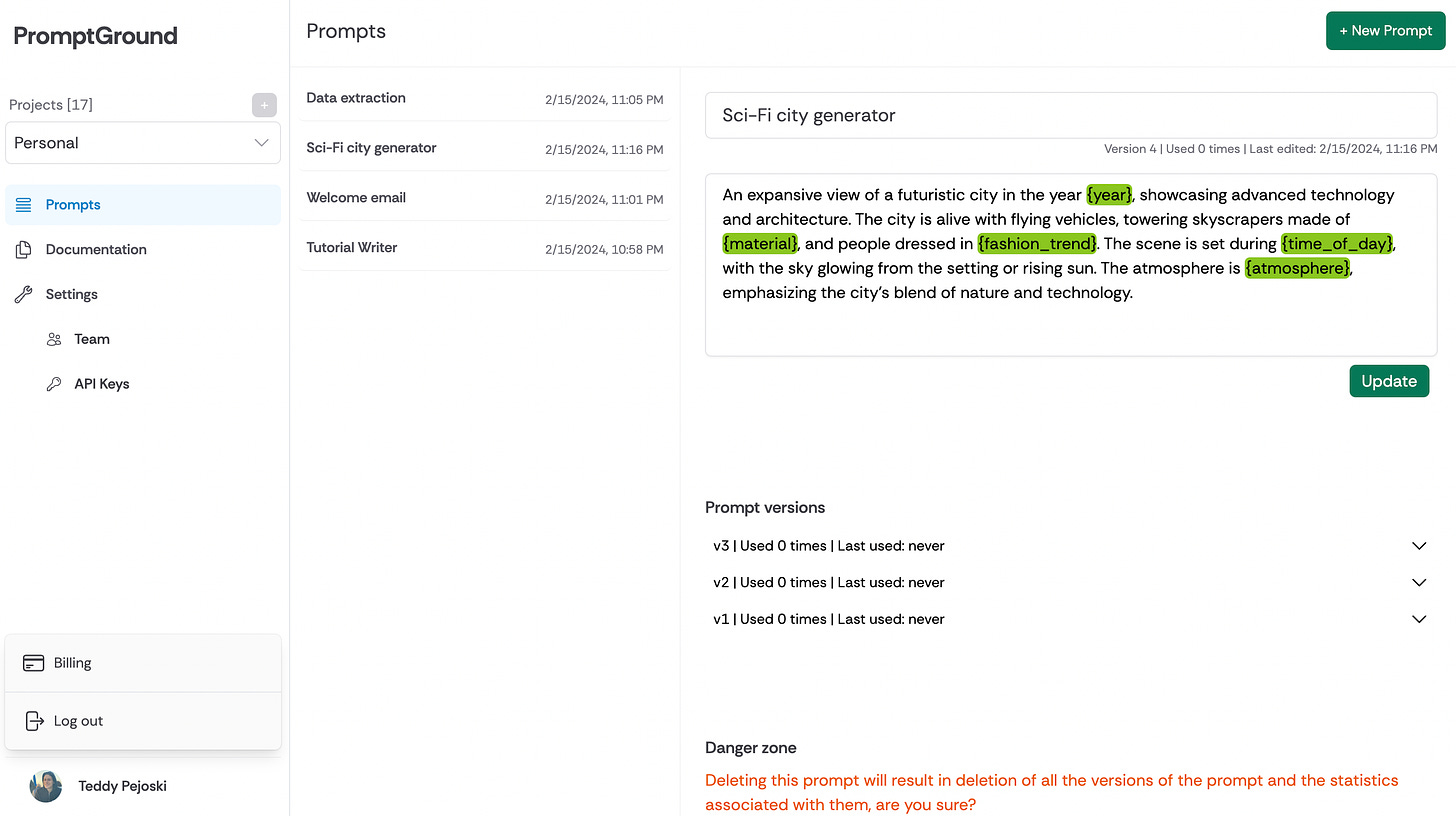

Promptground is another prompt manager that took the same approach as Langfuse where they just return prompt text and never call OpenAI directly. Their interface was much simpler to understand.

Despite it being relatively easy to set up, I decided not to use Promptground because I couldn’t contact anyone to ask questions about the app. There were no support contact details in the app, the sign-up email was no-reply, and the Terms of Service and Privacy Notice on the sign-up page were anchor links. Understandably, I did not feel comfortable storing my prompts on their system.

Setting Up PromptLayer 🍰

Once you have set your prompt up in PromptLayer, you will need an API key from their settings page, and then you implement it in your codebase like so:

This is a TypeScript implementation. They also have Python, LangChain and REST API implementations. This is a view of what you get in PromptLayer when managing an individual prompt in their registry.

Agenta AI Setup

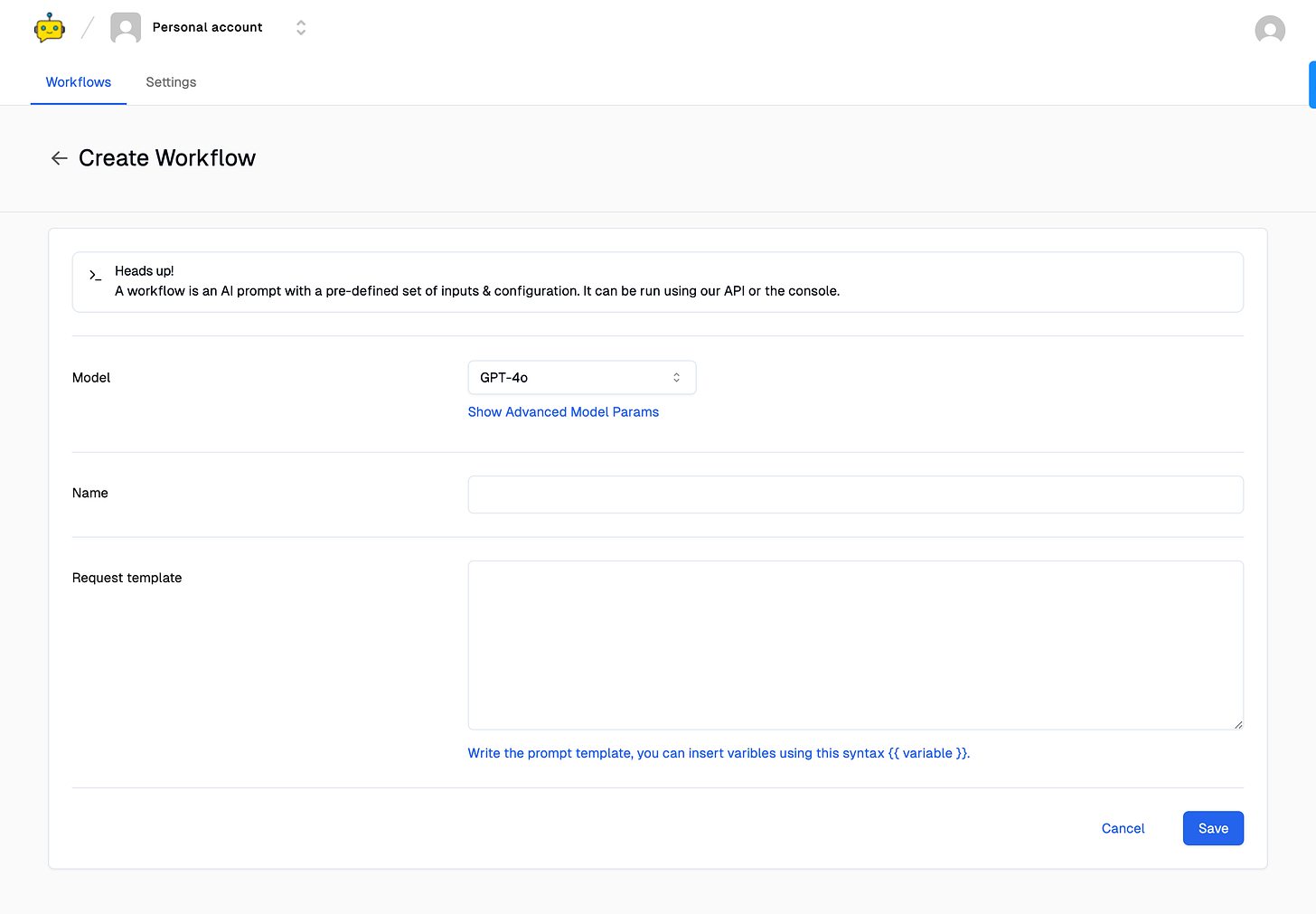

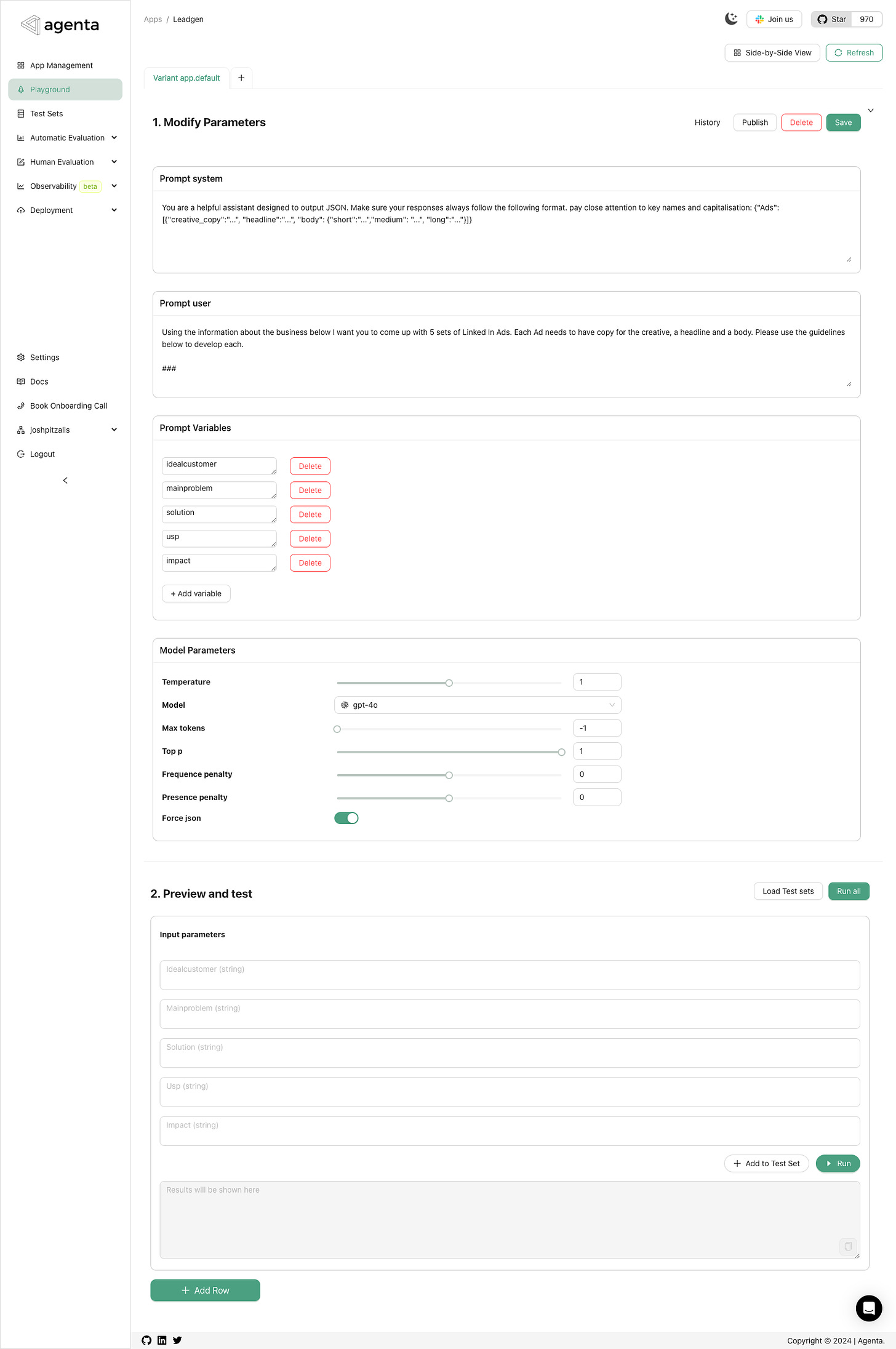

Once you sign up to Agenta and create a new project, your dashboard for creating a new prompt looks like this.

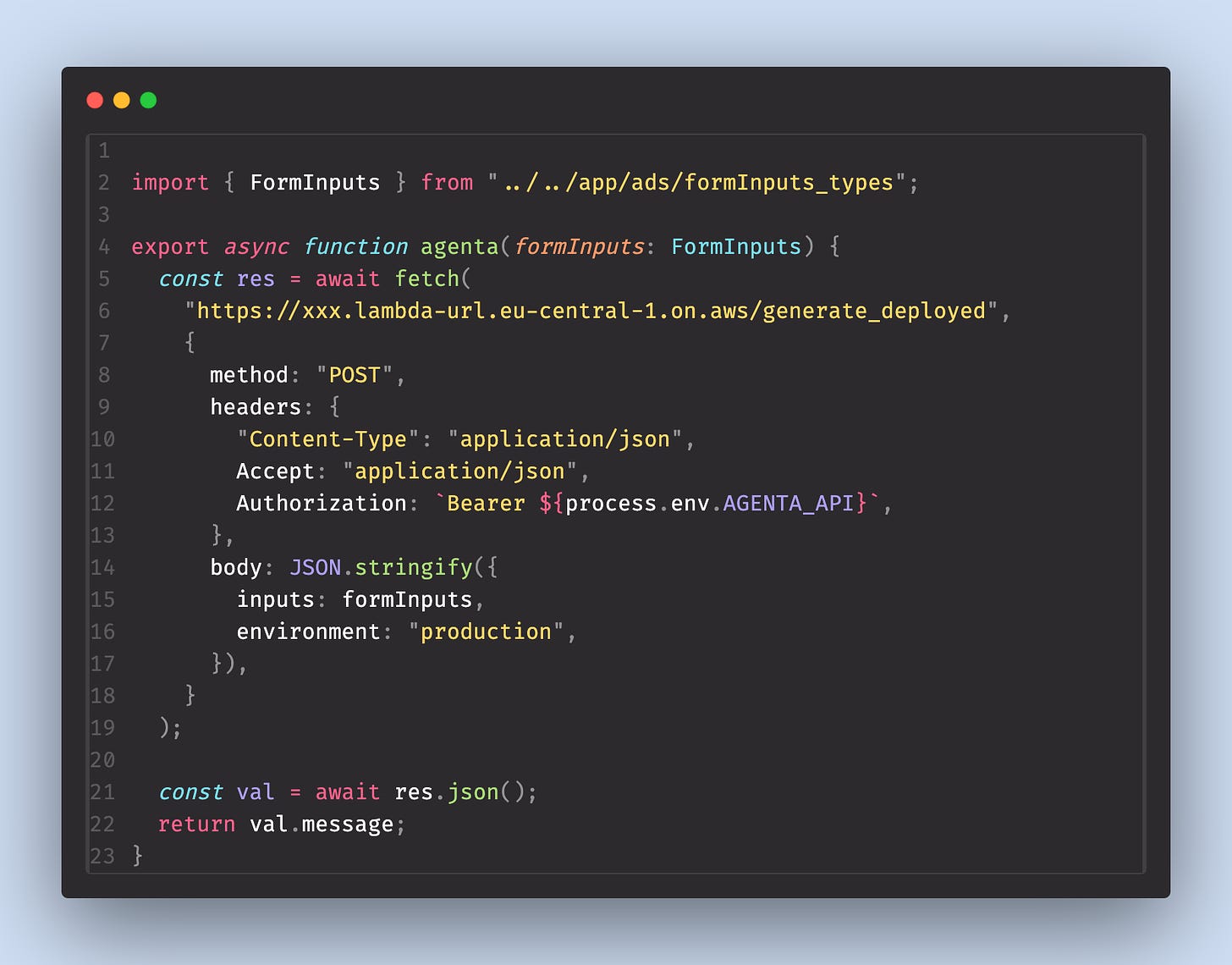

When you publish your prompt, a new endpoint will appear under the ‘Deployments’ tab on the left-hand panel. This endpoint tab will have some implementation code that you can copy directly into your project. You get a choice of Python, TypeScript or cURL implementations. The TypeScript implementation relied on axios, which I didn’t want to add to my project, so I just rewrote my own implementation below.

There is no Agenta client; they simply publish your prompt to an HTTP endpoint. At the time of writing, the endpoints are unsecured, so adding the API key is kind of redundant. I have spoken to their support team, who have been tremendously helpful as I was setting everything up, and they have assured me that they are releasing a secure version of their endpoints.

The JSON response from this endpoint is an object with a message field that contains your response from the LLM, as well as fields for token usage, cost and latency information. So if you’re used to working with OpenAI’s API and pulling the choices[0].message.content value from the completion, you don’t have to do that anymore.
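As an illustration of an axios-free call against an endpoint like this, here is a hedged sketch. The endpoint URL comes from the ‘Deployments’ tab and is passed in as a parameter. Only the `message` field is taken from the description above; the request body shape and the function names are assumptions.

```typescript
// Minimal fetch-like signature so the sketch does not depend on DOM or Node typings.
type FetchLike = (
  url: string,
  init: { method: string; headers: Record<string, string>; body: string }
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

// Pulls the LLM output out of the response object.
// The payload also carries usage, cost and latency fields, which are ignored here.
export function parseAgentaResponse(payload: unknown): string {
  if (typeof payload === "object" && payload !== null && "message" in payload) {
    const message = (payload as { message: unknown }).message;
    if (typeof message === "string") return message;
  }
  throw new Error("Unexpected response: no string `message` field");
}

// Posts the prompt inputs to the published endpoint and returns the message text.
// Assumption: inputs are sent as a JSON object in the request body.
export async function callAgentaEndpoint(
  fetchImpl: FetchLike,
  endpointUrl: string,
  inputs: Record<string, unknown>
): Promise<string> {
  const res = await fetchImpl(endpointUrl, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify(inputs),
  });
  if (!res.ok) throw new Error(`Agenta request failed with status ${res.status}`);
  return parseAgentaResponse(await res.json());
}
```

Validating the payload before reading `message` keeps a changed or failed response from silently turning into `undefined` further down the app.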

Agenta’s endpoints work with their own default LLM keys out of the box for easy setup. You can replace these with your own keys once you are ready to use the endpoints in production.

Conclusion

After exploring various prompt management systems, it’s clear that each offers unique features and approaches to address the challenge of managing AI prompts outside of source code. While some platforms like Portkey and PromptLayer provide comprehensive solutions with built-in OpenAI integration, others like Langfuse and Promptground focus on prompt storage and analytics.

Key takeaways:

- Portkey offers a user-friendly interface with JSON response formatting but requires prompt publishing for full API details.

- ManagePrompt provides basic analytics and a mini playground but lacks system prompt customization.

- Langfuse and Promptground focus on prompt storage and analytics without direct LLM integration.

- PromptLayer and Agenta AI offer straightforward implementations with various language support.