In this post, I’m looking into the error-handling capabilities of four leading prompt management solutions, which are among the best prompt engineering tools available: PortKey, Agenta AI, LangFuse, and PromptLayer.

I’m going to simulate 5 real-world scenarios that are likely to occur if you’re working on a product where non-technical contributors edit a set of prompts through a prompt manager.

- Changing the shape of the payload

- Sending too much information in a request

- Not sending enough information

- Handling incomplete JSON responses

- Needing to roll back to a previous version of a prompt

Changing the shape of the payload with prompt engineering tools ❌

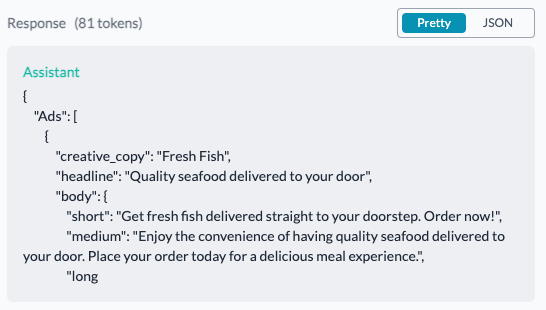

The first prompt engineering tool I looked at was PromptLayer. This is the dashboard for managing an individual prompt in PromptLayer. PromptLayer provides a small response preview at the bottom of each individual prompt’s dashboard page. This functions as a type of debugger.

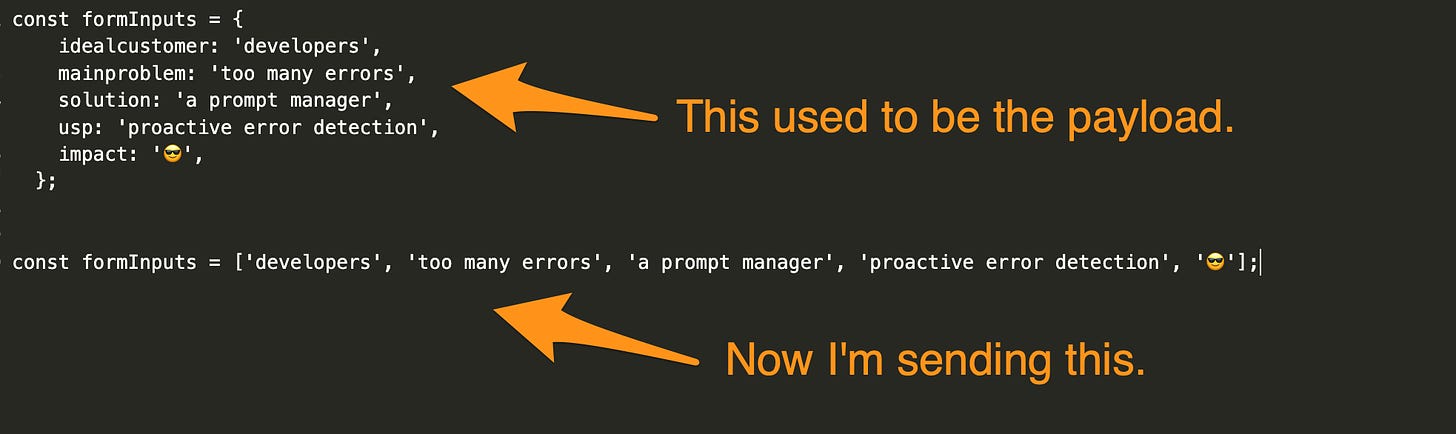

The form inputs I send to PromptLayer are sent as an object with five keys, all strings. So I changed the shape of this payload to an array of the same values to see what would happen.

To be clear, I’m not expecting this to work. What I want to understand is how it handles these kinds of catastrophic changes.

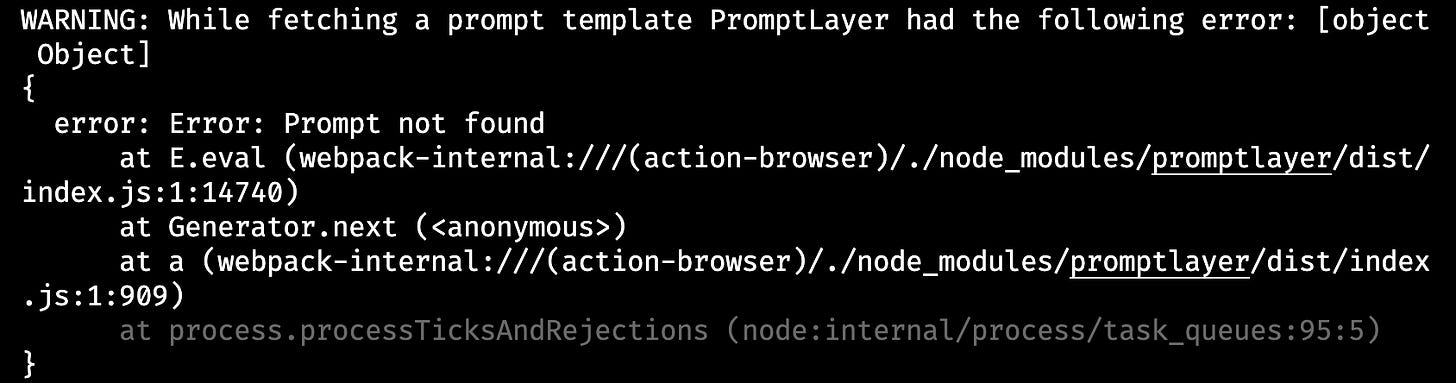

What I got was an error in my console saying “prompt not found”. The fact that it threw an error is great, but it wasn’t the most helpful error since the prompt didn’t change, only the shape of the payload.

There were no errors in the prompt manager, since the request was never sent. I would have preferred to see an error in the prompt manager so that, as a prompt engineer, I could tell my developer that someone had changed the shape of the incoming payload.
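The only signal here was a misleading “prompt not found” error. A cheap safeguard is to check the payload’s shape in your own code before calling the prompt manager. Below is a minimal sketch. It assumes the payload is a flat object of five string fields. The field names and the `assertFormPayload` helper are hypothetical and are not part of PromptLayer’s SDK.

```typescript
// Hypothetical field names for the five string form inputs.
const EXPECTED_FIELDS = ["name", "goal", "audience", "tone", "length"] as const;
type FormPayload = Record<(typeof EXPECTED_FIELDS)[number], string>;

// Throws a descriptive TypeError unless the payload is a plain object
// whose expected fields are all strings.
function assertFormPayload(payload: unknown): asserts payload is FormPayload {
  if (typeof payload !== "object" || payload === null || Array.isArray(payload)) {
    const got = Array.isArray(payload) ? "array" : payload === null ? "null" : typeof payload;
    throw new TypeError(`Expected payload to be an object, got ${got}`);
  }
  const record = payload as Record<string, unknown>;
  for (const field of EXPECTED_FIELDS) {
    if (typeof record[field] !== "string") {
      throw new TypeError(`Expected "${field}" to be a string, got ${typeof record[field]}`);
    }
  }
}
```

Call it right before the prompt manager request. An array or a missing field then fails with a message that names the actual problem, not the prompt.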

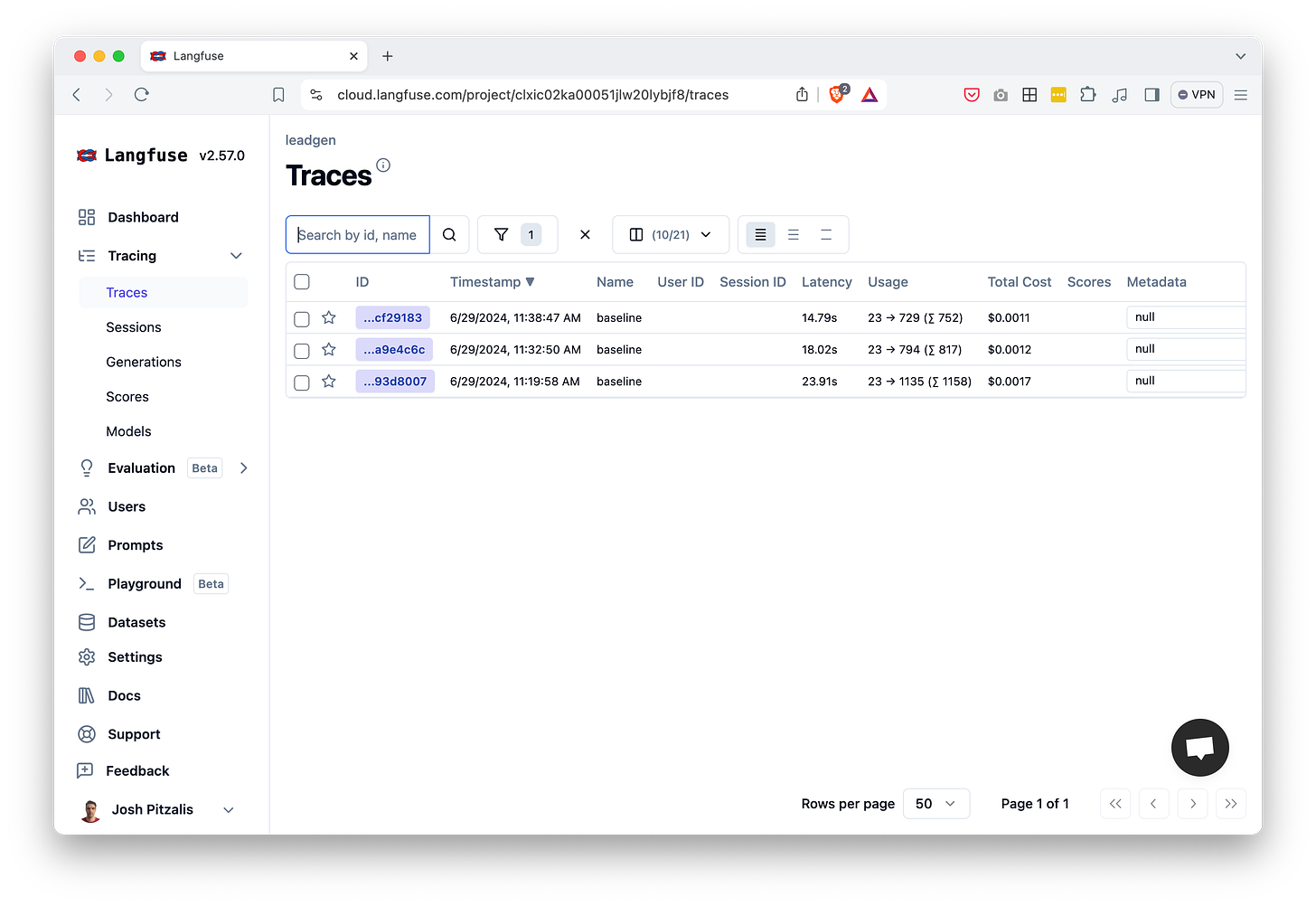

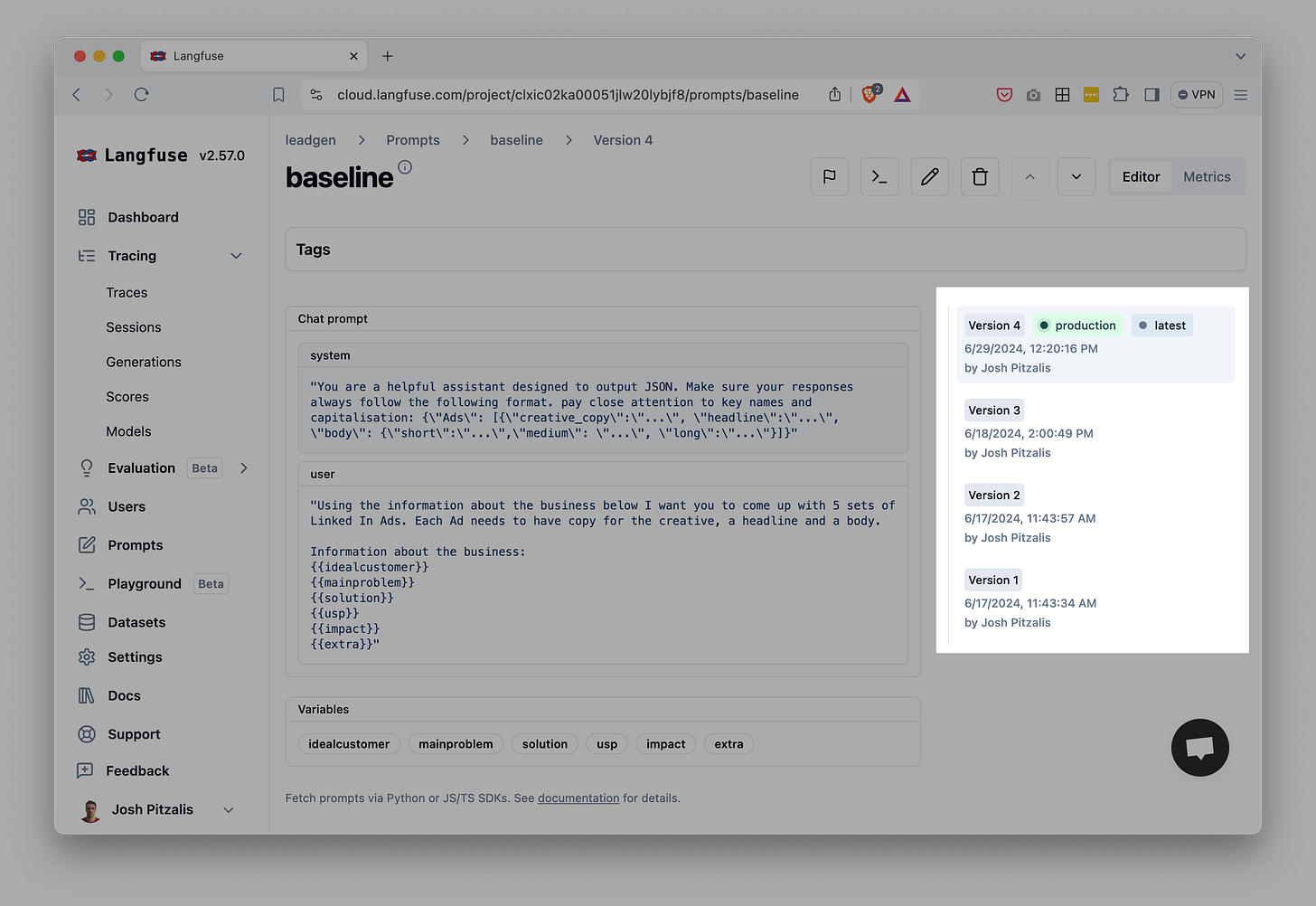

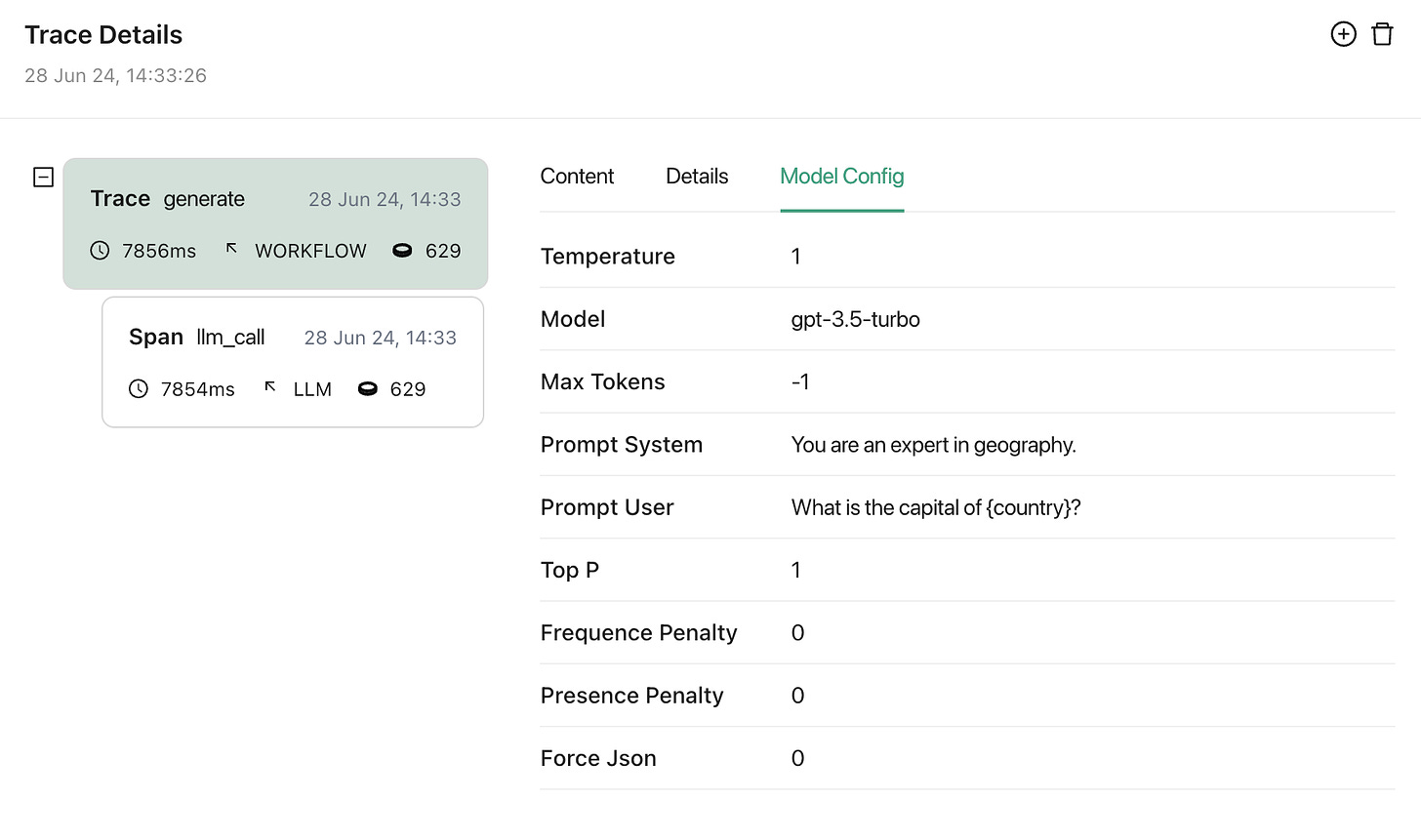

The next prompt management tool I looked at was Langfuse.

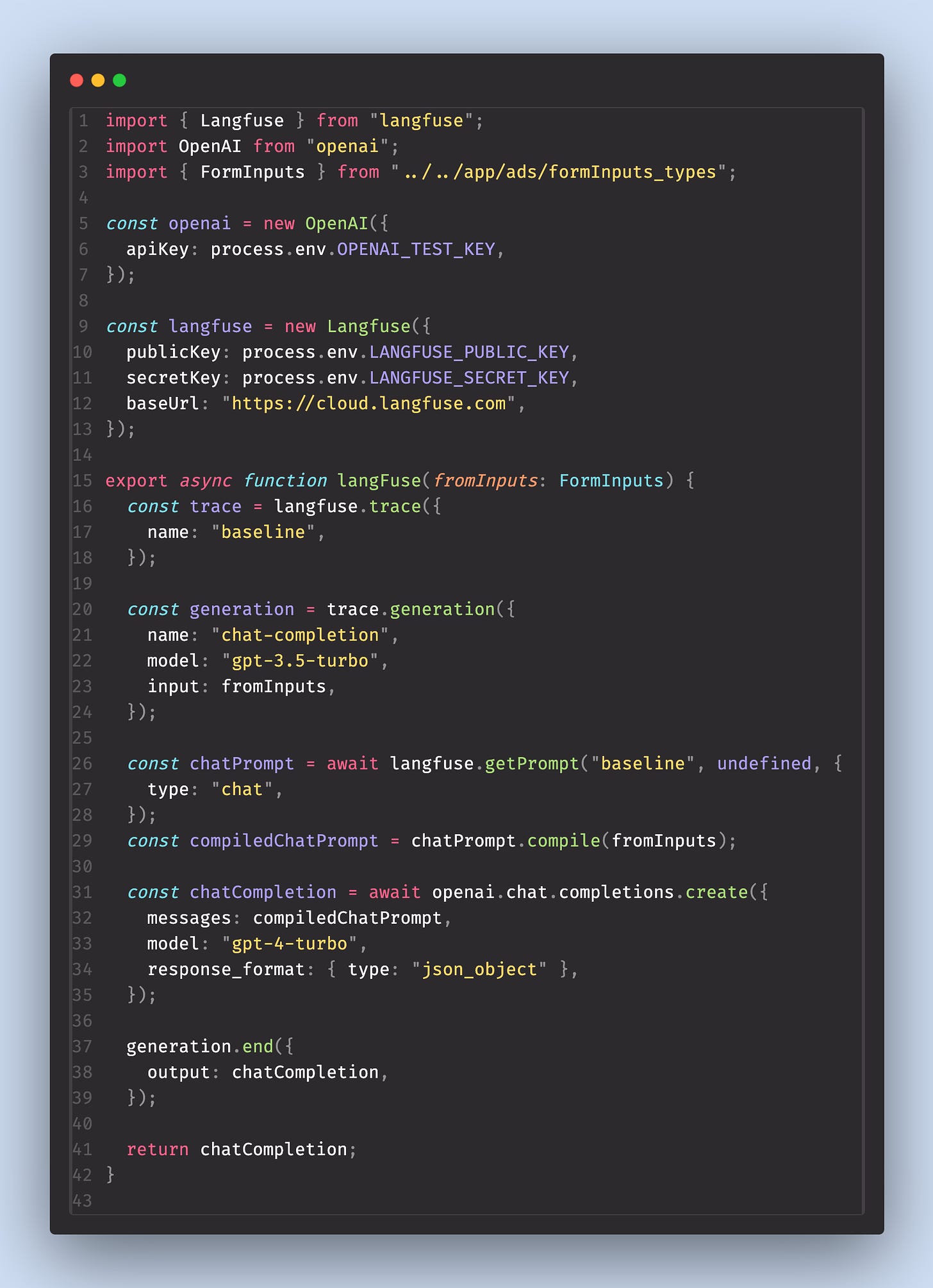

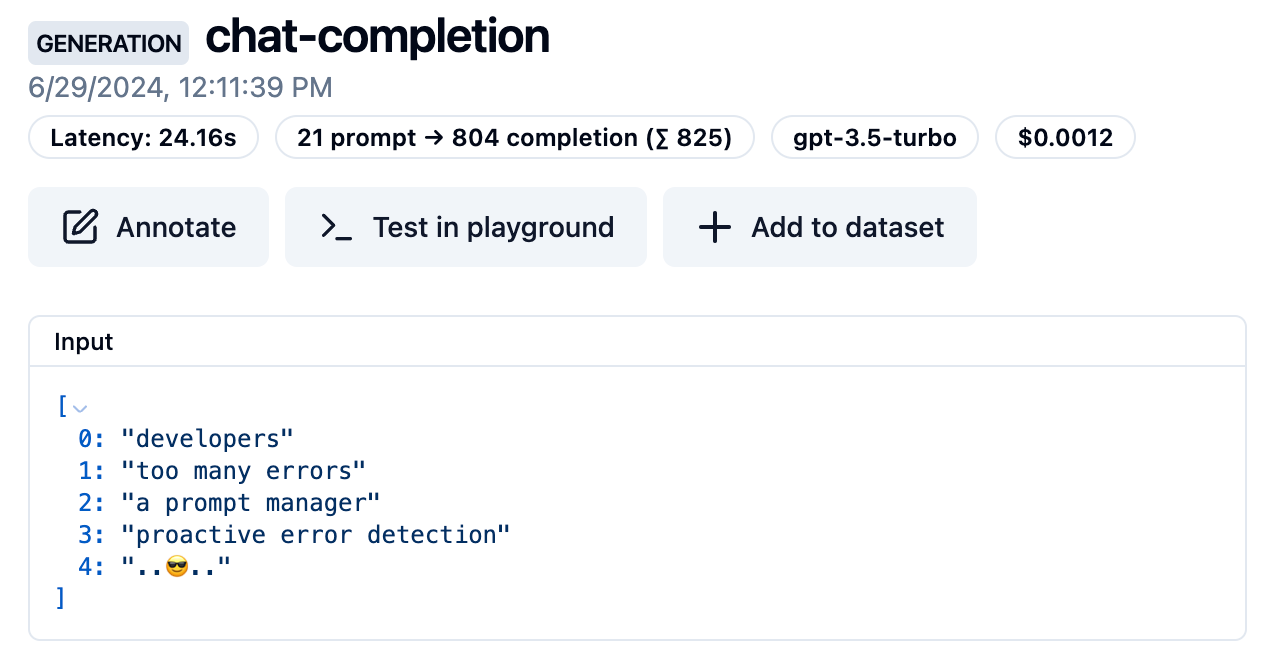

This is the Langfuse trace panel. Rather than showing traces on the dashboard for each individual prompt, they went with a universal tracing panel for all prompts together. Langfuse offers a comprehensive interface for prompt creation, allowing users to set up and manage prompts efficiently. If there is an error with your prompts, this is where you will be investigating.

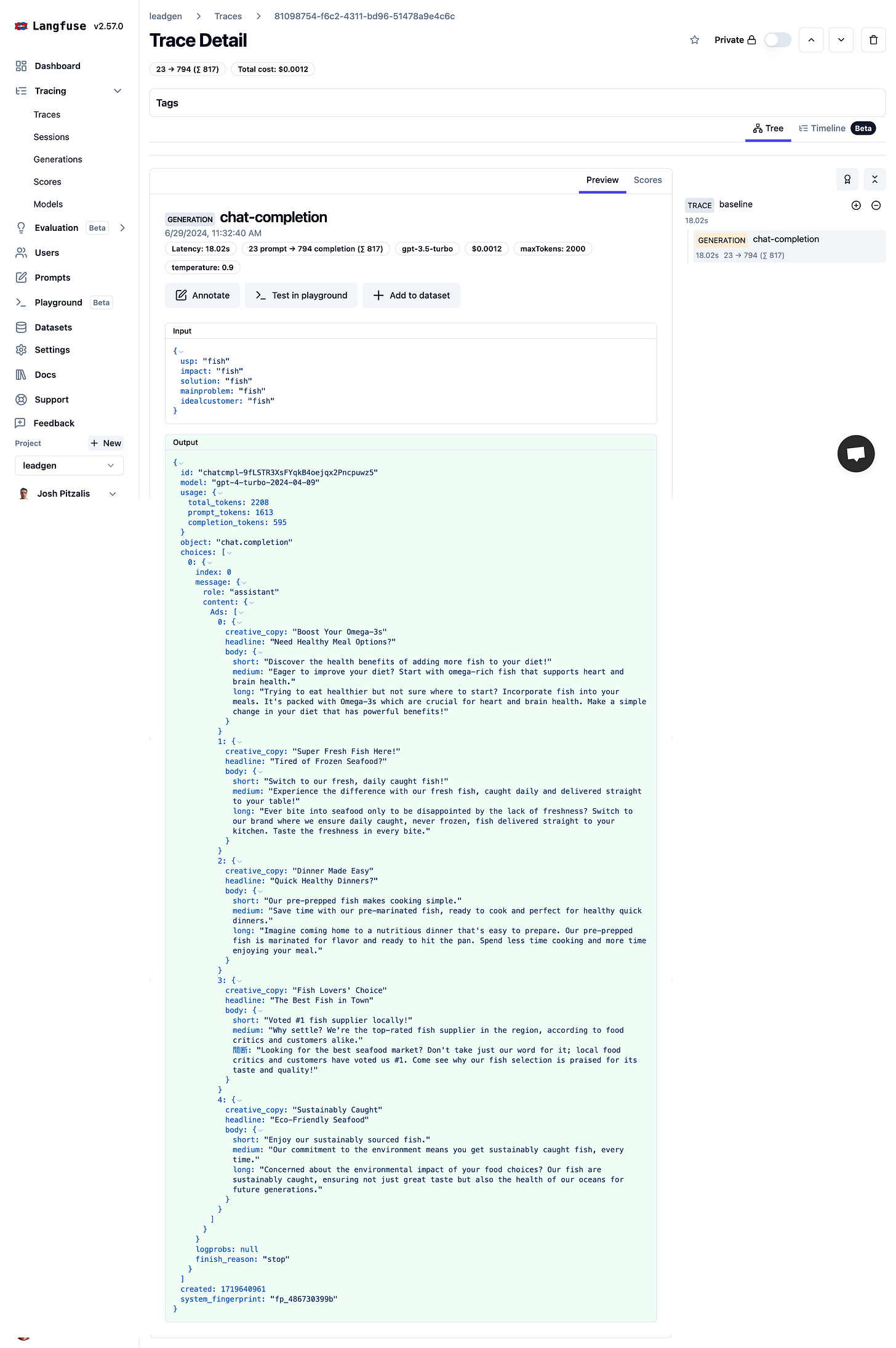

Clicking on an individual trace then opens up a detailed view of that individual prompt call that looks like this:

I understand that it’s just showing me the inputs and the outputs for a single prompt call, but I feel intimidated. This much information seems a bit unreasonable given that I just want to know whether the call was successful.

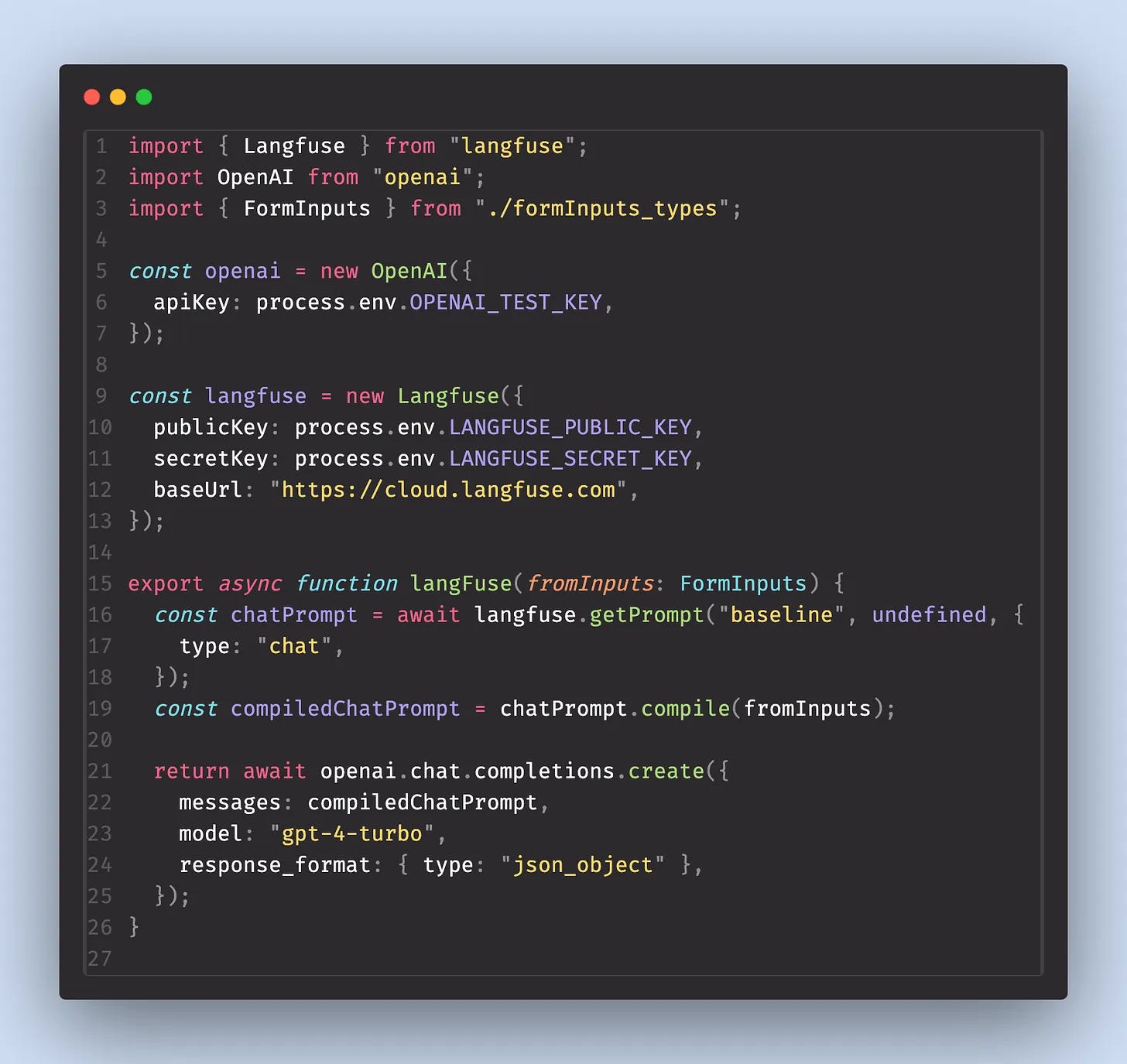

Setting things up to get to this stage was also confusing. The original setup to retrieve a prompt from Langfuse looked like this:

Compare this to the screenshot below with everything I had to change to get calls to show up in the tracing panel. That’s a lot of extra code, given that I am tracing prompts that are already stored on Langfuse.

Anyway, now that everything is set up, let’s see how well the system helps me detect errors when something goes wrong. I switched the shape of the payload sent to the prompt from an object, where each key corresponded to a form field, to an array of the same values.

Prompt Manager – No Failure

The prompt ran normally. There was no indication of any errors. It returned a normal response. I could see the array passed in as inputs on the trace but the output had nothing to do with the inputs. ChatGPT just ran the prompt without inputs and made up a response.

Codebase – No Failure

There were no errors in the codebase. This was expected: the prompt ran as normal and just returned a made-up response, rather than creating content related to the information I was passing in.

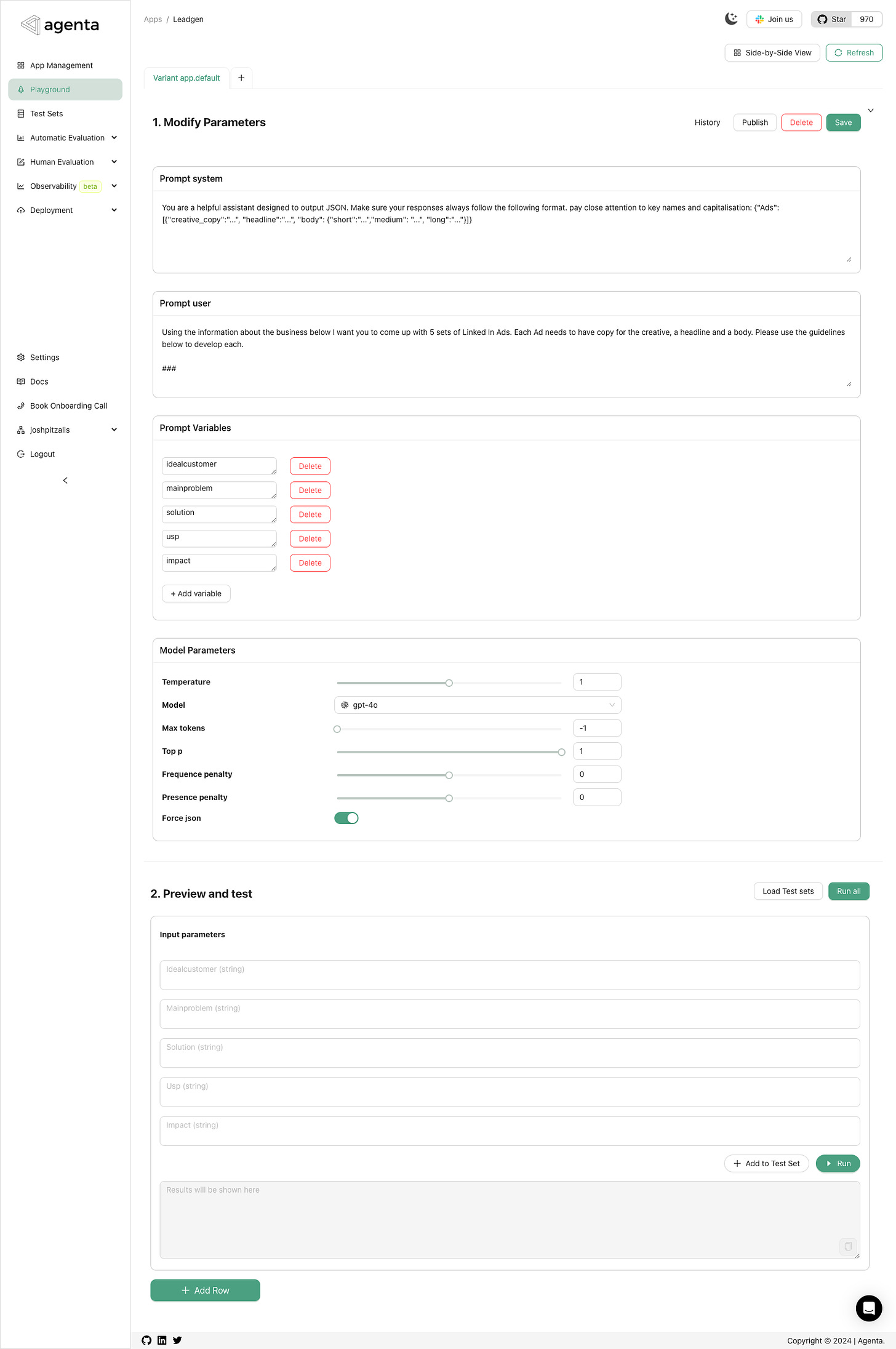

The next app I looked at for prompt creation was Agenta AI.

Once you sign up to Agenta and create a new project, your dashboard for creating a new prompt looks like this. Agenta AI provides a user-friendly dashboard that simplifies the prompt generation process, making it easy to create and manage new prompts.

I switched the shape of the payload I’m sending Agenta to see how it would handle this type of catastrophic change.

In both the prompt manager and the codebase, the call failed silently.

Prompt Manager – The prompt doesn’t get called

The prompt did not run in the prompt manager. They have an observability tab that shows you all of your prompt calls, much like a debugger. I would have liked to see the call with an associated error. This would at least let me tell the development team that something critical changed on their end.

Codebase – The function returns undefined.

There were no errors in the codebase. I wrapped the fetch call in a try/catch block and still nothing. The function ran normally and just returned undefined.
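A try/catch around `fetch` only catches rejected promises, and `fetch` rejects on network failures. It does not reject on HTTP error statuses, and it does not reject when the response body lacks the field your code reads; that missing field simply comes back as `undefined`. The sketch below makes that silent `undefined` loud. It assumes the completion text arrives in a top-level `message` field, which is a hypothetical name, so check the actual response shape of your endpoint. `HttpResult` is a structural stand-in that a real `fetch` `Response` satisfies.

```typescript
// Minimal structural type so the sketch doesn't depend on DOM or Node typings.
interface HttpResult {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}

// Turns the two silent failure modes into thrown errors:
// 1. a non-2xx status (fetch resolves rather than rejects on these), and
// 2. a 2xx body without the expected text field (which would read as undefined).
// `message` is a hypothetical field name for the completion text.
async function readCompletion(res: HttpResult): Promise<string> {
  if (!res.ok) {
    throw new Error(`Prompt endpoint returned HTTP ${res.status}`);
  }
  const body = await res.json();
  const text = (body as { message?: unknown } | null)?.message;
  if (typeof text !== "string") {
    throw new Error(`Response has no string "message" field: ${JSON.stringify(body)}`);
  }
  return text;
}
```

With this in place, `await readCompletion(await fetch(url, options))` inside the same try/catch surfaces the failure instead of passing `undefined` along.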

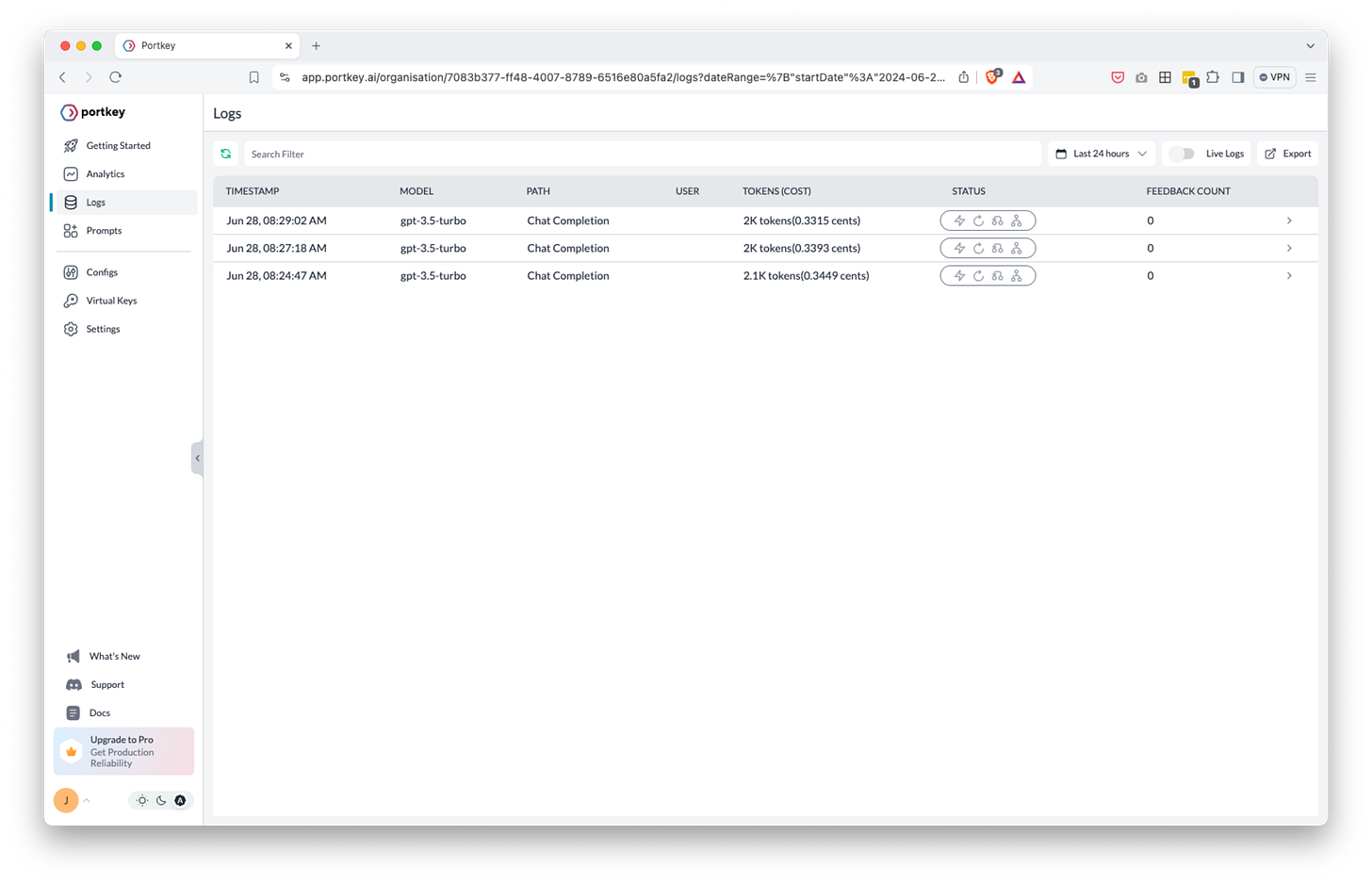

The last app I reviewed was called Portkey.

This is Portkey’s logger. Rather than showing logs on the dashboard for each individual prompt, they went with a universal logging panel for all prompts together. Portkey features a separate prompt library, where users organize and manage individual prompts. If there is an error with your prompts, this is where you will be investigating.

Same as before, I switched the shape of the payload sent to the prompt from an object to an array of the same values.

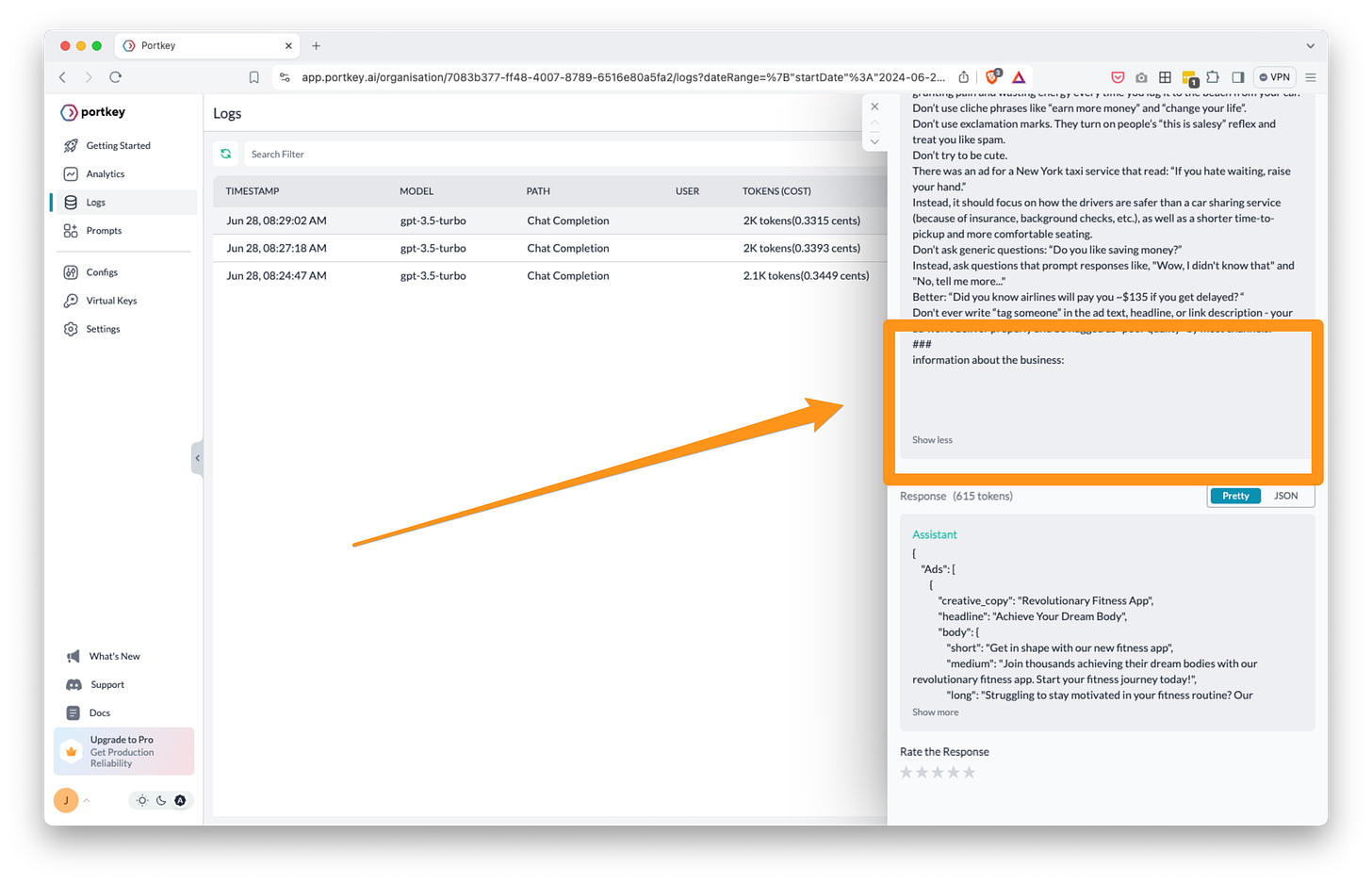

In Portkey, if you click on any of the logs it brings up a side panel with a preview of the request and the response.

Prompt Manager – No errors

The prompt ran normally. There was no indication of any errors. Previewing the request, it looked like the prompt just ran with blank input variable values and then returned a made-up response.

Codebase – No errors

There were no errors in the codebase. The prompt ran as normal and just returned a made-up response about a fitness app, rather than creating content related to the information I was passing in.

In summary:

- PortKey: No errors reported. The prompt ran with blank input values and returned a made-up response.

- Agenta AI: The call failed silently. The prompt never ran, nothing appeared in the observability tab, and the function returned undefined in the codebase.

- LangFuse: No errors reported. The prompt ran without the inputs and returned a made-up response.

- PromptLayer: Threw a “prompt not found” error in the console, but no errors in the prompt manager.

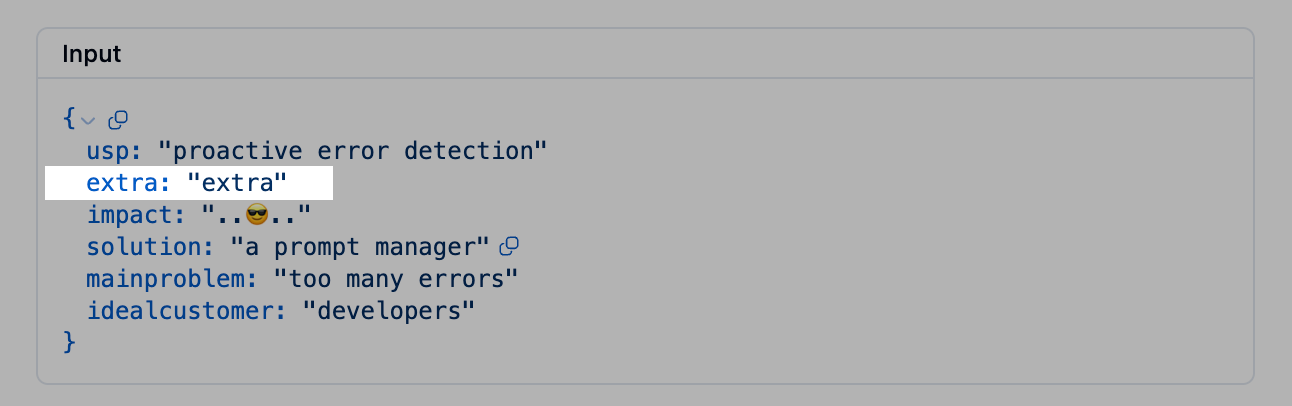

Sending too much information 🙈

Next, I went back to PromptLayer and tried adding an extra key to the payload to see if it would throw an error. To handle excess data effectively, it is crucial to organize prompts in a way that ensures all necessary information is utilized. The system worked fine. There was no awareness of the excess data now being passed in the request. I tried deleting a variable in the prompt manager and it had the same effect.

I think this is a dangerous case to ignore because it is going to happen so often. The development team updates the payload with new data but the prompt team forgets to consume it. Conversely, the prompt team deletes an input variable but the dev team doesn’t get the message and they continue to maintain everything needed to pass in the unused information. I would have preferred to see both the development and the prompting team being alerted to the excess data.
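Detecting this is mostly bookkeeping: compare the keys you send against the variables the template actually uses. Below is a minimal sketch. It assumes `{{variable}}` placeholders (placeholder syntax varies between tools) and that the template text is available to your code. `templateVariables` and `unusedPayloadKeys` are hypothetical helpers, not part of any of these tools.

```typescript
// Collects the variable names a template uses, assuming {{variable}} placeholders.
function templateVariables(template: string): Set<string> {
  const names = new Set<string>();
  const placeholder = /\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}/g;
  let match: RegExpExecArray | null;
  while ((match = placeholder.exec(template)) !== null) {
    names.add(match[1]);
  }
  return names;
}

// Keys the developers send that the template never references.
function unusedPayloadKeys(template: string, payload: Record<string, unknown>): string[] {
  const used = templateVariables(template);
  return Object.keys(payload).filter((key) => !used.has(key));
}
```

For example, the template `"Write a post for {{audience}} about {{ goal }}."` with a payload of `audience`, `goal` and `tone` reports `["tone"]`. The same check covers the reverse case described above: if the prompt team deletes a variable from the template, the key the developers still send shows up as unused.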

I then replicated the same scenario with Langfuse.

Prompt Manager – The prompt worked as normal, and this time it did return a response that was relevant to the inputs, but it just ignored the extra information. I was able to see the extra information being passed in, but nothing alerted me to the fact that the prompt was receiving information it was not using.

Codebase – Same, no errors or warnings, the redundant data was just ignored.

Agenta AI responded similarly.

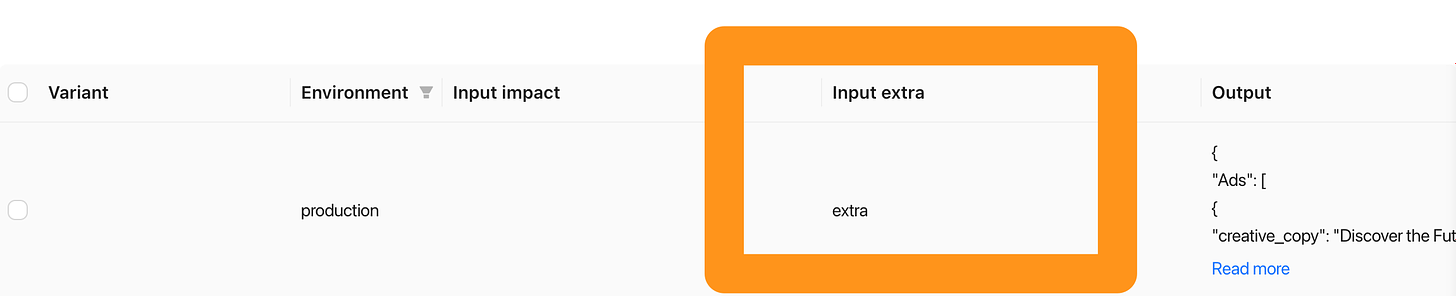

Prompt Manager – The prompt worked as normal and just ignored the extra information. However, it did show the extra input variable being passed in on the observability panel.

Codebase – No errors or warnings, the redundant data was just ignored.

Finally, I replicated the same scenario in PortKey.

Prompt Manager – The prompt worked as normal and just ignored the extra information.

Codebase – Same, no errors or warnings, the redundant data was just ignored.

As a developer, if I was sending information to a prompt manager and it was not being used, I’d want to know about that, especially if I’m trying to help the prompt team debug a problem where the prompts are not working as expected. At the very least, I would expect the prompt manager on their end to let them know they are receiving extra information that is not being used.

In summary:

- PortKey: Worked normally, ignoring extra information. No warnings provided.

- Agenta AI: Worked normally, ignoring extra information. The extra input was visible in the observability panel.

- LangFuse: Worked normally, ignoring extra information. The extra input was visible in the tracing panel.

- PromptLayer: Worked fine with no awareness of excess data.

Not sending enough information 🤐

Next I deleted one of the keys in the payload to PromptLayer and, surprisingly, there were no issues. The prompt just ran as normal, and did its best to make up for the missing context.

When dealing with natural language prompts, ensuring that all necessary information is provided is critical for maintaining the quality of the output. This is a particularly dangerous case because the quality of a completion will drop significantly if you start depriving it of critical information. The problem here is that GPT will always try to close the gap for you. Deprive it of enough information and it will sometimes tell you it needs more context or ask for clarification. But in a case like this, where I’m sending in data from 5 different form fields, GPT will never complain about one missing bit.

The solution would be to let the prompt team mark each input as mandatory or optional. When I added an input variable to the prompt in PromptLayer, or changed the name of an input, it failed silently in the same way. There was no indication to the prompt team that the prompt was not getting all the information it needed.

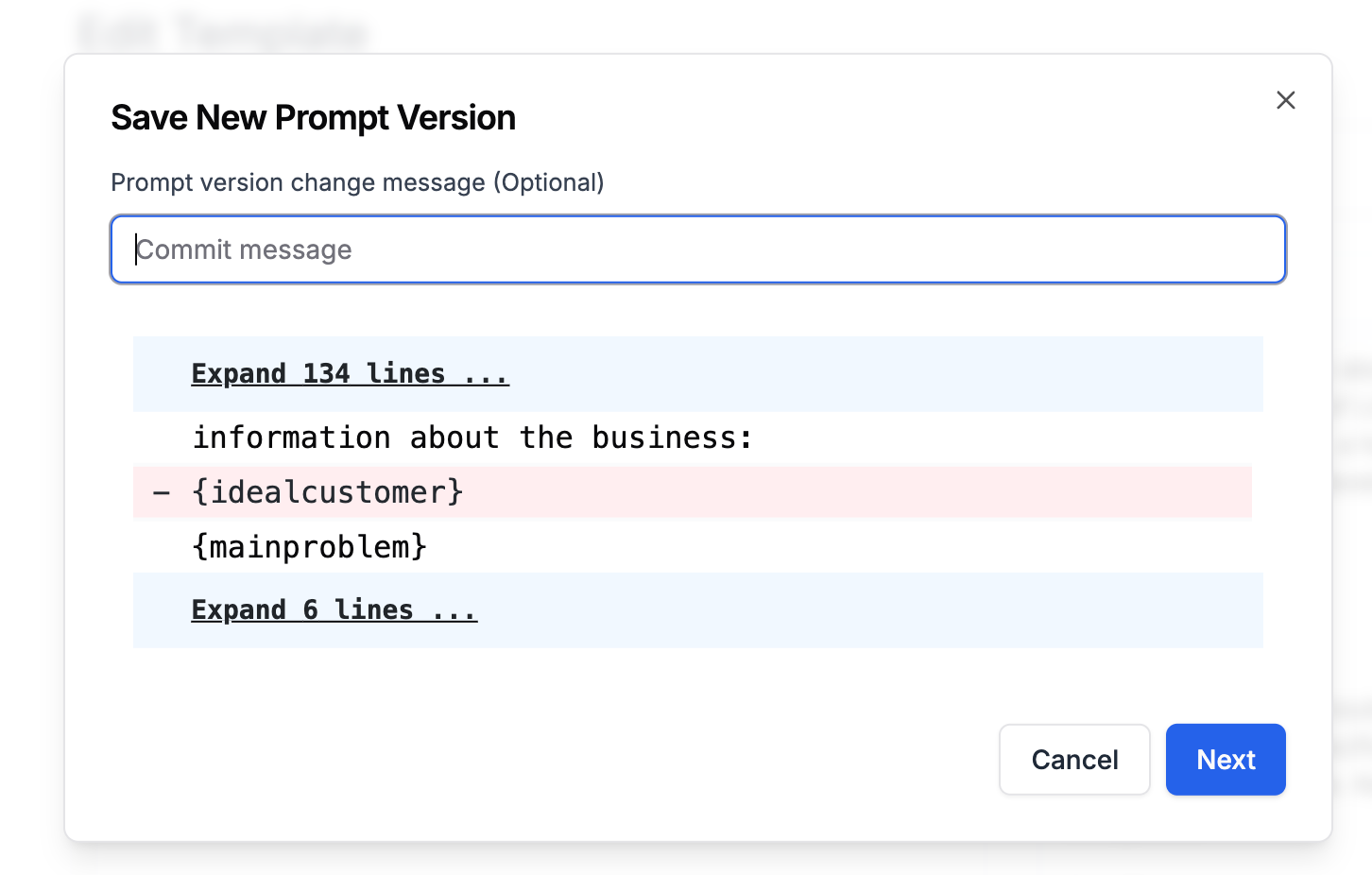

The way that PromptLayer handles these types of issues is to make you confirm your changes with a commit message (followed by the option to run evals). This is great for figuring out who broke the system, but it’s not great at helping someone understand how they’re breaking the system when they’re breaking it. This approach makes error handling entirely the user’s responsibility, when it could be more of a shared responsibility.
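Without a way to mark inputs as required in the prompt manager, the closest workaround is to enforce it in code wherever the template text is available before the model call. Below is a minimal sketch. It assumes `{{variable}}` placeholders and a hypothetical per-input spec; `InputSpec` and `renderStrict` are illustrative names, not any tool’s API. It also throws when the template references a variable the spec doesn’t declare, which covers the added or renamed input case.

```typescript
// Hypothetical per-input rules: required, or optional with a fallback value.
type InputRule = { required: true } | { required: false; fallback: string };
type InputSpec = Record<string, InputRule>;

// Own-property lookup, so names like "toString" don't resolve to Object.prototype.
function ownValue<T>(record: Record<string, T>, key: string): T | undefined {
  return Object.prototype.hasOwnProperty.call(record, key) ? record[key] : undefined;
}

// Fills {{variable}} placeholders, but refuses to build the prompt when a
// required input is blank or the template uses an input the spec doesn't declare.
function renderStrict(
  template: string,
  spec: InputSpec,
  values: Record<string, string | undefined>,
): string {
  // Absent and empty values are treated alike: both leave a gap the model will paper over.
  const isBlank = (key: string): boolean => {
    const value = ownValue(values, key);
    return value === undefined || value === "";
  };

  const missing = Object.keys(spec).filter((key) => spec[key].required && isBlank(key));
  if (missing.length > 0) {
    throw new Error(`Missing required prompt inputs: ${missing.join(", ")}`);
  }

  return template.replace(/\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}/g, (_match, key: string) => {
    const rule = ownValue(spec, key);
    if (rule === undefined) {
      // Catches added or renamed variables that the calling code doesn't know about.
      throw new Error(`Template uses undeclared input: ${key}`);
    }
    if (!isBlank(key)) return ownValue(values, key) as string;
    // Required inputs were checked above, so a blank value here belongs to an optional input.
    return rule.required === false ? rule.fallback : "";
  });
}
```

With a spec where `goal` is required and `tone` falls back to `"neutral"`, rendering `"Goal: {{goal}}. Tone: {{tone}}."` without a `tone` value yields `"Goal: run a 5k. Tone: neutral."`. Omitting `goal` throws before anything reaches the model.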

Langfuse

I added a new input variable to the prompt in the prompt manager to see if calling a prompt with insufficient data would throw any kind of warning.

Prompt Manager – The prompt worked as normal and just ignored the missing information. I could not find any way to mark an input variable as required. They all just seem to be optional by default.

Codebase – Same, I wrapped the call in a try catch block and there were no errors or warnings in my console.

Agenta AI

I added a new input variable to the prompt in the prompt manager to see if calling a prompt with insufficient data would throw any kind of warning.

Prompt Manager – The prompt worked as normal and just ignored the missing information. I could not find any way to mark an input variable as required. They all just seem to be optional by default.

Codebase – Same, there were no errors or warnings in my console.

PortKey

I added a new input variable to the prompt in the prompt manager to see if calling a prompt with insufficient data would throw any kind of warning.

Prompt Manager – The prompt worked as normal and just ignored the missing information. I could not find any way to mark an input variable as required. They all just seem to be optional by default.

Codebase – Same, I wrapped the create method of the portkey.prompts.completions API in a try catch block and there were no errors or warnings in my console.

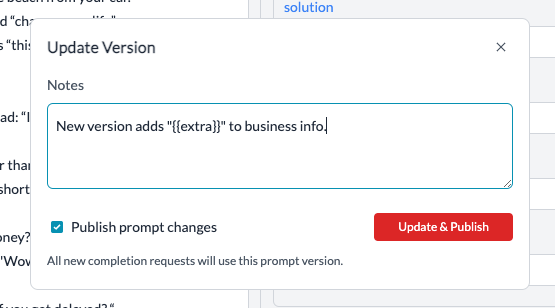

When I added the extra input variable in the prompt manager, it did ask me to make a note of the changes before updating the prompt. In a team scenario, that note shows who made the change, which helps track down a breaking change in a later review.

In summary:

- PortKey: Worked normally, ignoring missing information. No way to mark input variables as required.

- Agenta AI: Worked normally, ignoring missing information. No way to mark input variables as required.

- LangFuse: Worked normally, ignoring missing information. No way to mark input variables as required.

- PromptLayer: Ran normally, attempting to make up for missing context. No way to make inputs mandatory.

Rollback Capabilities

Rolling back to a previous version of a prompt wasn’t possible within PromptLayer; it had to be done in the source code by specifying the version of the prompt you want. Managing prompt versions effectively is crucial for maintaining the integrity of the prompt management system. I didn’t understand this and instead tried to delete a version in PromptLayer, but ended up deleting the whole template, losing all of the changes I’d made to the prompt over the past week. That’s my fault though.

In a situation where the prompting team publishes a version riddled with errors, and they know it, not giving them the option to roll it back, and forcing the developers to make a change instead, kind of defeats the whole point of a prompt manager.
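If rollback has to live in source code, keeping every pinned version in one place at least makes reverting a one-line change the developers can ship quickly. Below is a minimal sketch with hypothetical prompt names and version numbers. How a pinned version is actually passed to PromptLayer, or any other tool, depends on its SDK and isn’t modeled here.

```typescript
// Hypothetical central registry of pinned prompt versions. Rolling back a
// prompt means changing one number here rather than hunting through call sites.
const PINNED_PROMPT_VERSIONS: ReadonlyMap<string, number> = new Map([
  ["fitness-post", 3],
  ["email-summary", 7],
]);

// Returns the pinned version for a prompt, failing fast on unknown names
// so a typo can't silently fall back to whatever version is latest.
function pinnedVersion(promptName: string): number {
  const version = PINNED_PROMPT_VERSIONS.get(promptName);
  if (version === undefined) {
    throw new Error(`No pinned version for prompt "${promptName}"`);
  }
  return version;
}
```

This doesn’t give the prompt team the self-service rollback described above. It only narrows the developer-side change to a single line.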

Langfuse

I could see the different versions of my prompts in the prompt manager, but there was no way to roll back to a previous version now that I had made a mess of the latest version with this extra input variable.

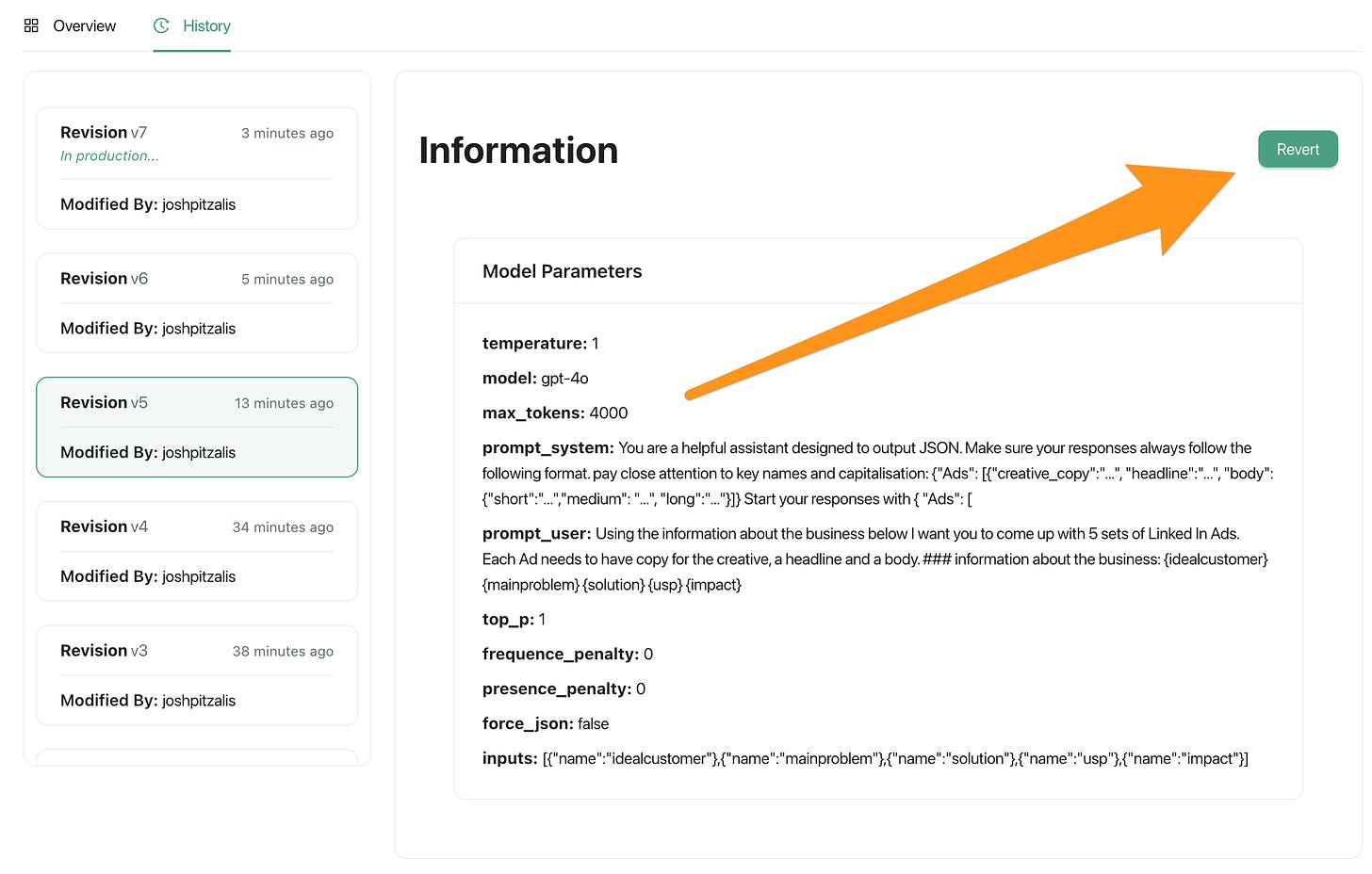

Agenta AI

Rolling back to a previous version of the prompt once I’d made a mess of things was super easy. They have a history tab on the endpoints section under the deployment tab that lets you revert to whichever version you want.

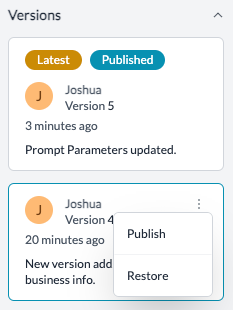

PortKey

PortKey made it easy to roll back to a previous version of the prompt when I tried to undo the mess I had made. On the dashboard for each individual prompt, there is a side panel with all the versions and a dropdown that lets you publish whichever version you want.

In summary:

- PortKey: Excellent rollback functionality. Easy to revert to previous versions from the dashboard.

- Agenta AI: Good rollback functionality with a history tab for reverting to previous versions.

- LangFuse: No rollback. Previous versions were visible in the prompt manager, but there was no way to revert to one.

- PromptLayer: Poor rollback functionality. Rollback had to be done in the source code, not in the prompt manager.

Handling Incomplete JSON Responses ⚠️

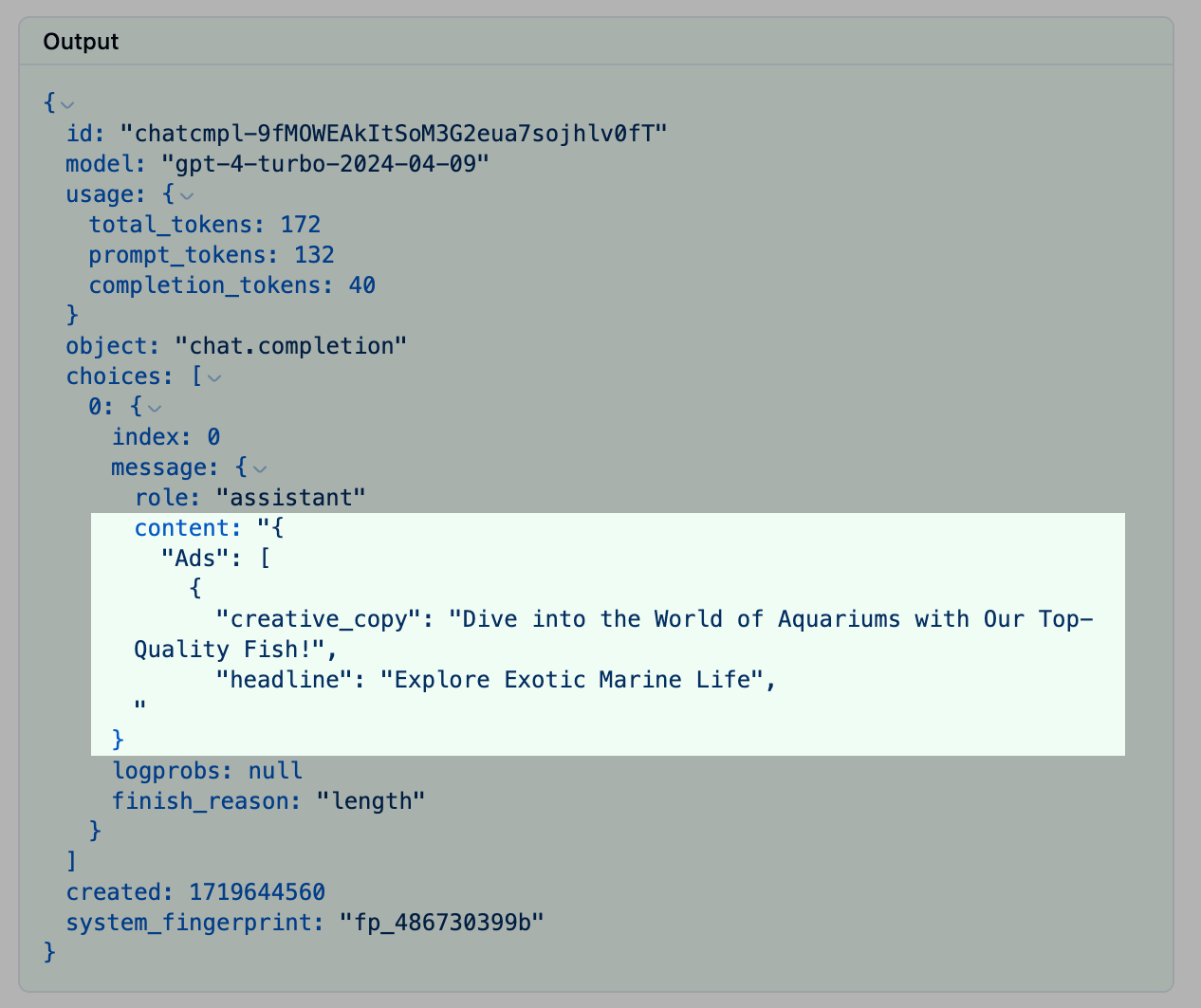

The last problem I had was setting the token count too low when consuming responses in JSON. Effective prompt engineering is essential to avoid issues like incomplete JSON responses and ensure the reliability of the system. The bottom of the PromptLayer dashboard screenshot (at the beginning of this post) is their debugger. It gives you a little response preview for each prompt. In the screenshot, you can see that the JSON response just ends abruptly. This causes all kinds of nasty errors in the app, but there’s no error in the debugger. The prompt team wouldn’t even know they’re sending back invalid JSON. This is one of those areas where I feel like the prompt manager could share a little of the responsibility for avoiding avoidable errors.

Langfuse

Since Langfuse only stores and compiles the prompt (the model call itself happens in my code), there is no way to adjust temperature and token settings in the prompt manager. I have to hardcode these kinds of changes into my API call in the source code. This is unfortunate since the whole point of a prompt manager, from my perspective at least, is to give non-technical contributors a space to edit and optimize prompts. Without access to configuration options like token count and temperature settings, they will have one arm tied behind their back.

Regardless, I hardcoded the token limit to 40 and set the response format to JSON, which would almost certainly force ChatGPT to return invalid JSON. I wanted to see if the Langfuse tracer would alert me to these kinds of detectable errors.

The response was incomplete JSON.

The tracer even gave the response a happy green background, as if to say everything was fine, despite the finish reason in the response output being “length”.

On the developer end, I do get a response object that includes the completion metadata, so I could debug this and see that generation stopped because of the length limit (“finish_reason”: “length”).

However, I would have liked to also see this in the prompt manager, since this is clearly an avoidable and easily detectable error, but I was not alerted to it in any way.
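The check the tracer could have made is cheap to do in application code. Below is a minimal sketch. It assumes an OpenAI-style chat completion, where each choice carries `finish_reason` and `message.content`, and the interface models only those two fields. `parseJsonCompletion` is a hypothetical helper name.

```typescript
// Minimal slice of an OpenAI-style chat completion choice; only the
// fields this check reads are modeled.
interface CompletionChoice {
  finish_reason: string | null;
  message: { content: string | null };
}

// Parses a JSON-mode completion, failing loudly when the output was cut off
// by the token limit or is otherwise not valid JSON.
function parseJsonCompletion(choice: CompletionChoice): unknown {
  if (choice.finish_reason === "length") {
    throw new Error("Completion hit the max token limit; JSON is likely truncated");
  }
  const content = choice.message.content ?? "";
  try {
    return JSON.parse(content);
  } catch {
    throw new Error(`Completion is not valid JSON: ${content.slice(0, 80)}`);
  }
}
```

Checking for `"length"` before parsing gives a clearer message than the `SyntaxError` that `JSON.parse` would otherwise throw on the truncated text.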

Agenta AI

I tried to set the response format to JSON and then reduce the max tokens to force the JSON to be returned incomplete, just to see how it would handle the error. Unfortunately, it told me JSON mode doesn’t work with gpt-4o (which it does), and the max tokens setting was also unresponsive: I set the token limit to 4000, but it showed up as -1 in the observability panel’s trace details (despite returning plenty of tokens).

PortKey

I was happy to see that the advanced parameters in PortKey let you set the response format to JSON. So I set the response format to JSON and then reduced the max tokens so that it could not complete the JSON output I was expecting.

The response was incomplete JSON.

On the developer end, I do get a response object that includes the completion metadata, so I could debug this and see that generation stopped because of the length limit (“finish_reason”: “length”).

However, I would have liked to also see this in the prompt manager, since this is clearly an avoidable and easily detectable error, but I was not alerted to it in any way.

In summary:

- PortKey: Returned incomplete JSON without any warnings in the prompt manager.

- Agenta AI: Couldn’t be tested. JSON mode was reported as not working with gpt-4o, despite it being supported, and the max tokens setting showed up as -1.

- LangFuse: Returned incomplete JSON. The tracer showed the response as successful despite a finish reason of “length”.

- PromptLayer: Returned incomplete JSON without any errors in the debugger.

Overall

- Error Detection: Generally poor across all platforms. Most errors went undetected or unreported. Prompt engineers would greatly benefit from improved error detection capabilities in these tools.

- Payload Handling: None of the platforms handled payload shape changes well.

- Input Validation: No platform offered a way to mark inputs as required or validate input data.

- JSON Handling: Poor handling of incomplete JSON responses across the board.

- Rollback Functionality: PortKey and Agenta AI offered the best rollback capabilities.

Conclusion

While these prompt managers offer valuable features, as a developer, if I’m going to be working with a client or a product team via a prompt manager, then I want to be relatively confident that obvious error detection is covered for things like payload changes, missing information, or excess data. Implementing more proactive error detection, clearer communication of issues, and user-friendly version control would significantly enhance the reliability and usability of the system.

This teardown was conducted with respect for each of the product teams and their products. I hope this was useful to them, and I welcome any feedback or updates to the post.